TR2023-071

EVAL: Explainable Video Anomaly Localization

- Singh, A., Jones, M.J., Learned-Miller, E., "EVAL: Explainable Video Anomaly Localization", IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), DOI: 10.1109/CVPR52729.2023.01795, June 2023.

    @inproceedings{Singh2023jun,
      author = {Singh, Ashish and Jones, Michael J. and Learned-Miller, Erik},
      title = {{EVAL: Explainable Video Anomaly Localization}},
      booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      year = 2023,
      month = jun,
      doi = {10.1109/CVPR52729.2023.01795},
      url = {https://www.merl.com/publications/TR2023-071}
    }

Abstract:

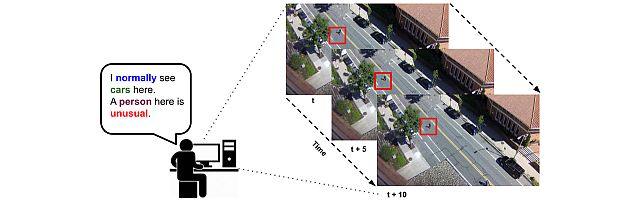

We develop a novel framework for single-scene video anomaly localization that allows for human-understandable reasons for the decisions the system makes. We first learn general representations of objects and their motions (using deep networks) and then use these representations to build a high-level, location-dependent model of any particular scene. This model can be used to detect anomalies in new videos of the same scene. Importantly, our approach is explainable: our high-level appearance and motion features can provide human-understandable reasons for why any part of a video is classified as normal or anomalous. We conduct experiments on standard video anomaly detection datasets (Street Scene, CUHK Avenue, ShanghaiTech, and UCSD Ped1 and Ped2) and show significant improvements over the previous state of the art. All of our code and extra datasets will be made publicly available.
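To make the idea of a location-dependent scene model more concrete, the following is a minimal sketch and not the authors' implementation. It assumes that each video region has already been summarized as a small vector of human-readable features (the names in `FEATURE_NAMES` are hypothetical; the abstract does not list the actual features), that normal behaviour at each grid location is stored as a set of exemplar vectors, and that the anomaly score is the Euclidean distance to the nearest stored exemplar. The class `LocationModel` and its methods are illustrative names only.

```python
import numpy as np

# Hypothetical, human-readable feature names (an assumption for illustration;
# the abstract does not specify the paper's actual features).
FEATURE_NAMES = ["is_person", "is_car", "speed", "direction"]


class LocationModel:
    """Stores, per grid location, feature vectors observed in normal video."""

    def __init__(self):
        self.exemplars = {}  # (row, col) -> list of 1-D feature arrays

    def fit(self, samples):
        """samples: iterable of ((row, col), feature_vector) from normal video."""
        for loc, feat in samples:
            self.exemplars.setdefault(loc, []).append(np.asarray(feat, dtype=float))

    def score(self, loc, feat):
        """Return (distance to nearest normal exemplar, that exemplar)."""
        stored = self.exemplars.get(loc)
        if not stored:
            # Nothing was ever seen here during training: maximally anomalous.
            return float("inf"), None
        stack = np.stack(stored)
        dists = np.linalg.norm(stack - np.asarray(feat, dtype=float), axis=1)
        i = int(np.argmin(dists))
        return float(dists[i]), stack[i]

    def explain(self, loc, feat):
        """Name the feature that deviates most from the nearest normal exemplar."""
        _, nearest = self.score(loc, feat)
        if nearest is None:
            return "no normal activity was observed at this location"
        diffs = np.abs(np.asarray(feat, dtype=float) - nearest)
        j = int(np.argmax(diffs))
        return f"{FEATURE_NAMES[j]} differs most from the closest normal exemplar"


# Usage: train on two normal observations at one location, then test a new one.
model = LocationModel()
model.fit([((0, 0), [1, 0, 0.2, 0.0]), ((0, 0), [1, 0, 0.3, 0.0])])
test_score, _ = model.score((0, 0), [0, 1, 0.9, 0.0])
print(test_score, model.explain((0, 0), [0, 1, 0.9, 0.0]))
```

Because the stored features are interpretable by construction in this sketch, the explanation is simply a readout of which feature deviates most from what was previously seen at that location; the learned representations in the paper are presumably richer than this toy vector.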