TR2021-076

Generalized One-Class Learning Using Pairs of Complementary Classifiers

-

- , "Generalized One-Class Learning Using Pairs of Complementary Classifiers", IEEE Transactions on Pattern Analysis and Machine Intelligence, DOI: 10.1109/TPAMI.2021.3092999, June 2021.BibTeX TR2021-076 PDF Software

- @article{Cherian2021jun,

- author = {Cherian, Anoop and Wang, Jue},

- title = {{Generalized One-Class Learning Using Pairs of Complementary Classifiers}},

- journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

- year = 2021,

- month = jun,

- doi = {10.1109/TPAMI.2021.3092999},

- url = {https://www.merl.com/publications/TR2021-076}

- }

- , "Generalized One-Class Learning Using Pairs of Complementary Classifiers", IEEE Transactions on Pattern Analysis and Machine Intelligence, DOI: 10.1109/TPAMI.2021.3092999, June 2021.

-

MERL Contact:

-

Research Areas:

Abstract:

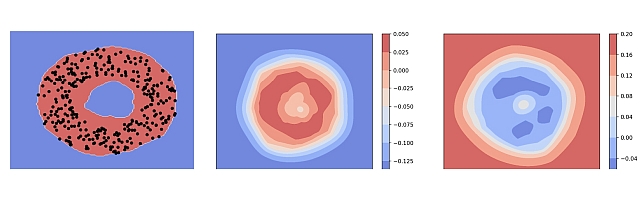

One-class learning is the classic problem of fitting a model to the data for which annotations are available only for a single class. In this paper, we explore novel objectives for one-class learning, which we collectively refer to as Generalized One-class Discriminative Subspaces (GODS). Our key idea is to learn a pair of complementary classifiers to flexibly bound the one-class data distribution, where the data belongs to the positive half-space of one of the classifiers in the complementary pair and to the negative half-space of the other. To avoid redundancy while allowing non-linearity in the classifier decision surfaces, we propose to design each classifier as an orthonormal frame and seek to learn these frames via jointly optimizing for two conflicting objectives, namely: i) to minimize the distance between the two frames, and ii) to maximize the margin between the frames and the data. The learned orthonormal frames will thus characterize a piecewise linear decision surface that allows for efficient inference, while our objectives seek to bound the data within a minimal volume that maximizes the decision margin, thereby robustly capturing the data distribution. We explore several variants of our formulation under different constraints on the constituent classifiers, including kernelized feature maps. We demonstrate the empirical benefits of our approach via experiments on data from several applications in computer vision, such as anomaly detection in video sequences, human poses, and human activities. We also explore the generality and effectiveness of GODS for non-vision tasks via experiments on several UCI datasets, demonstrating state-of-the-art results.