TR2020-083

Can Increasing Input Dimensionality Improve Deep Reinforcement Learning?


Ota, K., Oiki, T., Jha, D.K., Mariyama, T., Nikovski, D.N., "Can Increasing Input Dimensionality Improve Deep Reinforcement Learning?", International Conference on Machine Learning (ICML), Daumé III, Hal and Singh, Aarti, Eds., June 2020, pp. 7424-7433.

@inproceedings{Ota2020jun,
  author = {Ota, Kei and Oiki, Tomoaki and Jha, Devesh K. and Mariyama, Toshisada and Nikovski, Daniel N.},
  title = {{Can Increasing Input Dimensionality Improve Deep Reinforcement Learning?}},
  booktitle = {International Conference on Machine Learning (ICML)},
  year = 2020,
  editor = {Daumé III, Hal and Singh, Aarti},
  pages = {7424--7433},
  month = jun,
  publisher = {PMLR},
  url = {https://www.merl.com/publications/TR2020-083}
}



Abstract:

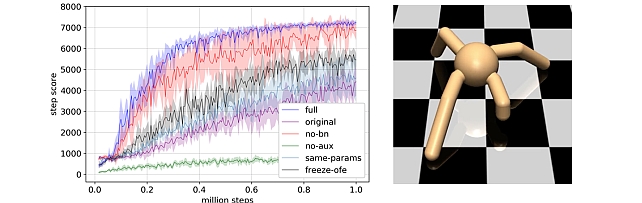

Deep reinforcement learning (RL) algorithms have recently achieved remarkable successes in various sequential decision-making tasks, leveraging advances in methods for training large deep networks. However, these methods usually require large amounts of training data, which is often a serious obstacle for real-world applications. One natural question is whether learning good representations of states and using larger networks helps in learning better policies. In this paper, we study whether increasing input dimensionality improves the performance and sample efficiency of model-free deep RL algorithms. To do so, we propose an online feature extractor network (OFENet) that uses neural networks to produce good representations to be used as inputs to deep RL algorithms. Even though high-dimensional input is usually assumed to make learning harder for RL agents, we show that RL agents in fact learn more efficiently with the high-dimensional representation than with the lower-dimensional state observations. We believe that stronger feature propagation together with larger networks (and thus a larger search space) allows RL agents to learn more complex functions of states and thus improves sample efficiency. Through numerical experiments, we show that the proposed method outperforms several other state-of-the-art algorithms in terms of both sample efficiency and performance. Code for the proposed method is available at http://www.merl.com/research/license/OFENet.
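To make the idea of "increasing input dimensionality" concrete, the following is a minimal sketch of a feature extractor that maps a low-dimensional state to a higher-dimensional representation. It is not the released OFENet code. The concatenation-style layers are one assumed way to obtain strong feature propagation, and the function names, layer sizes, and the 17-dimensional state are all hypothetical. The sketch uses untrained random weights and leaves out how the extractor is trained, which the abstract does not describe.

```python
import numpy as np

def init_dense_block(rng, in_dim, units, num_layers):
    """Create weights for a stack of layers whose inputs grow by concatenation."""
    params = []
    dim = in_dim
    for _ in range(num_layers):
        w = rng.normal(0.0, np.sqrt(2.0 / dim), size=(dim, units))
        b = np.zeros(units)
        params.append((w, b))
        dim += units  # the next layer sees the previous input plus the new features
    return params

def expand_state(params, state):
    """Map a batch of low-dimensional states to a higher-dimensional representation."""
    z = state
    for w, b in params:
        h = np.maximum(z @ w + b, 0.0)        # ReLU features computed from the current input
        z = np.concatenate([z, h], axis=-1)   # keep the input alongside the new features
    return z

rng = np.random.default_rng(0)
params = init_dense_block(rng, in_dim=17, units=32, num_layers=4)  # hypothetical sizes
state = rng.normal(size=(5, 17))   # batch of 5 hypothetical 17-dimensional observations
features = expand_state(params, state)
print(features.shape)  # (5, 145): 17 raw dimensions + 4 layers * 32 units
```

In this sketch the raw observation is preserved in the first 17 columns of the output, so a downstream policy or value network would receive both the original state and the added features.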


Related News & Events

NEWS: MERL researchers presenting three papers at ICML 2020

Date: July 12, 2020 - July 18, 2020

Where: Vienna, Austria (virtual this year)

MERL Contacts: Anoop Cherian; Daniel N. Nikovski

Research Areas: Artificial Intelligence, Computer Vision, Data Analytics, Dynamical Systems, Machine Learning, Optimization, Robotics

Brief: MERL researchers are presenting three papers at the International Conference on Machine Learning (ICML 2020), which is being held virtually this year from July 12 to 18. ICML is one of the top-tier conferences in machine learning, with an acceptance rate of 22%. The MERL papers are:

1) "Finite-time convergence in Continuous-Time Optimization" by Orlando Romero and Mouhacine Benosman.

2) "Can Increasing Input Dimensionality Improve Deep Reinforcement Learning?" by Kei Ota, Tomoaki Oiki, Devesh Jha, Toshisada Mariyama, and Daniel Nikovski.

3) "Representation Learning Using Adversarially-Contrastive Optimal Transport" by Anoop Cherian and Shuchin Aeron.


Related Publication

@article{Ota2020mar,
  author = {Ota, Kei and Oiki, Tomoaki and Jha, Devesh K. and Mariyama, Toshisada and Nikovski, Daniel},
  title = {{Can Increasing Input Dimensionality Improve Deep Reinforcement Learning?}},
  journal = {arXiv},
  year = 2020,
  month = mar,
  url = {https://arxiv.org/abs/2003.01629}
}