- Date: Friday, November 7, 2025

Location: Google, New York, NY

MERL Contacts: Jonathan Le Roux; Yoshiki Masuyama

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - SANE 2025, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Friday November 7, 2025 at Google, in New York, NY.

It was the 12th edition in the SANE series of workshops, which started in 2012 and is typically held every year alternately in Boston and New York. Since the first edition, the audience has grown to about 200 participants and 50 posters each year, and SANE has established itself as a vibrant, must-attend event for the speech and audio community across the northeast and beyond.

SANE 2025 featured invited talks by six leading researchers from the Northeast as well as from the wider community: Dan Ellis (Google DeepMind), Leibny Paola Garcia Perera (Johns Hopkins University), Yuki Mitsufuji (Sony AI), Julia Hirschberg (Columbia University), Yoshiki Masuyama (MERL), and Robin Scheibler (Google DeepMind). It also featured a lively poster session with 50 posters.

MERL Speech and Audio Team's Yoshiki Masuyama presented a well-received overview of the team's recent work on "Neural Fields for Spatial Audio Modeling". His talk highlighted how neural fields are reshaping spatial audio research by enabling flexible, data-driven interpolation of head-related transfer functions and room impulse responses. He also discussed the integration of sound-propagation physics into neural field models through physics-informed neural networks, showcasing MERL’s advances at the intersection of acoustics and deep learning.

SANE 2025 was co-organized by Jonathan Le Roux (MERL), Quan Wang (Google DeepMind), and John R. Hershey (Google DeepMind). SANE remained a free event thanks to generous sponsorship by Google, MERL, Apple, Bose, and Carnegie Mellon University.

Slides and videos of the talks are available from the SANE workshop website and via a YouTube playlist.

-

- Date: Sunday, April 6, 2025 - Friday, April 11, 2025

Location: Hyderabad, India

MERL Contacts: Wael H. Ali; Petros T. Boufounos; Radu Corcodel; Chiori Hori; Siddarth Jain; Toshiaki Koike-Akino; Jonathan Le Roux; Yanting Ma; Hassan Mansour; Yoshiki Masuyama; Joshua Rapp; Diego Romeres; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Artificial Intelligence, Communications, Computational Sensing, Electronic and Photonic Devices, Machine Learning, Robotics, Signal Processing, Speech & Audio

Brief - MERL has made numerous contributions to both the organization and technical program of ICASSP 2025, which is being held in Hyderabad, India from April 6-11, 2025.

Sponsorship

MERL is proud to be a Silver Patron of the conference and will participate in the student job fair on Thursday, April 10. Please join this session to learn more about employment opportunities at MERL, including openings for research scientists, post-docs, and interns.

MERL is pleased to be the sponsor of two IEEE Awards that will be presented at the conference. We congratulate Prof. Björn Erik Ottersten, the recipient of the 2025 IEEE Fourier Award for Signal Processing, and Prof. Shrikanth Narayanan, the recipient of the 2025 IEEE James L. Flanagan Speech and Audio Processing Award. Both awards will be presented in-person at ICASSP by Anthony Vetro, MERL President & CEO.

Technical Program

MERL is presenting 15 papers in the main conference on a wide range of topics including source separation, sound event detection, sound anomaly detection, speaker diarization, music generation, robot action generation from video, indoor airflow imaging, WiFi sensing, Doppler single-photon Lidar, optical coherence tomography, and radar imaging. Another paper on spatial audio will be presented at the Generative Data Augmentation for Real-World Signal Processing Applications (GenDA) Satellite Workshop.

MERL Researchers Petros Boufounos and Hassan Mansour will present a Tutorial on “Computational Methods in Radar Imaging” in the afternoon of Monday, April 7.

Petros Boufounos will also be giving an industry talk on Thursday, April 10 at 12pm, on “A Physics-Informed Approach to Sensing”.

About ICASSP

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event has been attracting more than 4000 participants each year.

-

- Date & Time: Wednesday, October 30, 2024; 1:00 PM

Speaker: Samuel Clarke, Stanford University

MERL Host: Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Robotics, Speech & Audio

Abstract  Acoustic perception is invaluable to humans and robots in understanding objects and events in their environments. These sounds are dependent on properties of the source, the environment, and the receiver. Many humans possess remarkable intuition both to infer key properties of each of these three aspects from a sound and to form expectations of how these different aspects would affect the sound they hear. In order to equip robots and AI agents with similar if not stronger capabilities, our research has taken a two-fold path. First, we collect high-fidelity datasets in both controlled and uncontrolled environments which capture real sounds of objects and rooms. Second, we introduce differentiable physics-based models that can estimate acoustic properties of objects and rooms from minimal amounts of real audio data, then can predict new sounds from these objects and rooms under novel, “unseen” conditions.

-

- Date: Thursday, October 17, 2024

Location: Google, Cambridge, MA

MERL Contact: Jonathan Le Roux

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - SANE 2024, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Thursday October 17, 2024 at Google, in Cambridge, MA.

It was the 11th edition in the SANE series of workshops, which started in 2012 and is typically held every year alternately in Boston and New York. Since the first edition, the audience has steadily grown, with a new record of 200 participants and 53 posters in 2024.

SANE 2024 featured invited talks by six leading researchers from the Northeast as well as from the international community: Quan Wang (Google), Greta Tuckute (MIT), Mark Hamilton (MIT), Bhuvana Ramabhadran (Google), Zhiyao Duan (University of Rochester), and Chris Donahue (Carnegie Mellon University). It also featured a lively poster session with 53 posters.

SANE 2024 was co-organized by Jonathan Le Roux (MERL) and John R. Hershey (Google). SANE remained a free event thanks to generous sponsorship by Google and MERL.

Slides and videos of the talks are available from the SANE workshop website.

-

- Date: Sunday, April 14, 2024 - Friday, April 19, 2024

Location: Seoul, South Korea

MERL Contacts: Petros T. Boufounos; Chiori Hori; Toshiaki Koike-Akino; Jonathan Le Roux; Hassan Mansour; Kieran Parsons; Joshua Rapp; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Artificial Intelligence, Computational Sensing, Machine Learning, Robotics, Signal Processing, Speech & Audio

Brief - MERL has made numerous contributions to both the organization and technical program of ICASSP 2024, which is being held in Seoul, Korea from April 14-19, 2024.

Sponsorship and Awards

MERL is proud to be a Bronze Patron of the conference and will participate in the student job fair on Thursday, April 18. Please join this session to learn more about employment opportunities at MERL, including openings for research scientists, post-docs, and interns.

MERL is pleased to be the sponsor of two IEEE Awards that will be presented at the conference. We congratulate Prof. Stéphane G. Mallat, the recipient of the 2024 IEEE Fourier Award for Signal Processing, and Prof. Keiichi Tokuda, the recipient of the 2024 IEEE James L. Flanagan Speech and Audio Processing Award.

Jonathan Le Roux, MERL Speech and Audio Senior Team Leader, will also be recognized during the Awards Ceremony for his recent elevation to IEEE Fellow.

Technical Program

MERL will present 13 papers in the main conference on a wide range of topics including automated audio captioning, speech separation, audio generative models, speech and sound synthesis, spatial audio reproduction, multimodal indoor monitoring, radar imaging, depth estimation, physics-informed machine learning, and integrated sensing and communications (ISAC). Three workshop papers have also been accepted for presentation on audio-visual speaker diarization, music source separation, and music generative models.

Perry Wang is the co-organizer of the Workshop on Signal Processing and Machine Learning Advances in Automotive Radars (SPLAR), held on Sunday, April 14. It features keynote talks from leaders in both academia and industry, peer-reviewed workshop papers, and lightning talks from ICASSP regular tracks on signal processing and machine learning for automotive radar and, more generally, radar perception.

Gordon Wichern will present an invited keynote talk on analyzing and interpreting audio deep learning models at the Workshop on Explainable Machine Learning for Speech and Audio (XAI-SA), held on Monday, April 15. He will also appear in a panel discussion on interpretable audio AI at the workshop.

Perry Wang also co-organizes a two-part special session on Next-Generation Wi-Fi Sensing (SS-L9 and SS-L13) which will be held on Thursday afternoon, April 18. The special session includes papers on PHY-layer oriented signal processing and data-driven deep learning advances, and supports upcoming 802.11bf WLAN Sensing Standardization activities.

Petros Boufounos is participating as a mentor in ICASSP’s Micro-Mentoring Experience Program (MiME).

About ICASSP

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 3000 participants.

-

- Date & Time: Wednesday, January 31, 2024; 12:00 PM

Speaker: Greta Tuckute, MIT

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Abstract  Advances in machine learning have led to powerful models for audio and language, proficient in tasks like speech recognition and fluent language generation. Beyond their immense utility in engineering applications, these models offer valuable tools for cognitive science and neuroscience. In this talk, I will demonstrate how these artificial neural network models can be used to understand how the human brain processes language. The first part of the talk will cover how audio neural networks serve as computational accounts for brain activity in the auditory cortex. The second part will focus on the use of large language models, such as those in the GPT family, to non-invasively control brain activity in the human language system.

-

- Date: Thursday, October 26, 2023

Location: New York University, Brooklyn, New York, NY

MERL Contacts: Jonathan Le Roux; Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - SANE 2023, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Thursday October 26, 2023 at NYU in Brooklyn, New York.

It was the 10th edition in the SANE series of workshops, which started in 2012 and is typically held every year alternately in Boston and New York. Since the first edition, the audience has steadily grown, and SANE 2023 broke SANE 2019's record with 200 participants and 51 posters.

This year's SANE took place in conjunction with the WASPAA workshop, held October 22-25 in upstate New York.

SANE 2023 featured invited talks by seven leading researchers from the Northeast and beyond: Arsha Nagrani (Google), Gaël Richard (Télécom Paris), Gordon Wichern (MERL), Kyunghyun Cho (NYU / Prescient Design), Anna Huang (Google DeepMind / MILA), Wenwu Wang (University of Surrey), and Yuan Gong (MIT). It also featured a lively poster session with 51 posters.

SANE 2023 was co-organized by Jonathan Le Roux (MERL), Juan P. Bello (NYU), and John R. Hershey (Google). SANE remained a free event thanks to generous sponsorship by NYU, MERL, Google, Adobe, Bose, Meta Reality Labs, and Amazon.

Slides and videos of the talks are available from the SANE workshop website.

-

- Date & Time: Thursday, September 28, 2023; 12:00 PM

Speaker: Komei Sugiura, Keio University

MERL Host: Chiori Hori

Research Areas: Artificial Intelligence, Machine Learning, Robotics, Speech & Audio

Abstract  Recent advances in multimodal models that fuse vision and language are revolutionizing robotics. In this lecture, I will begin by introducing recent multimodal foundational models and their applications in robotics. The second topic of this talk will address our recent work on multimodal language processing in robotics. The shortage of home care workers has become a pressing societal issue, and the use of domestic service robots (DSRs) to assist individuals with disabilities is seen as a possible solution. I will present our work on DSRs that are capable of open-vocabulary mobile manipulation, referring expression comprehension and segmentation models for everyday objects, and future captioning methods for cooking videos and DSRs.

-

- Date: Sunday, June 4, 2023 - Saturday, June 10, 2023

Location: Rhodes Island, Greece

MERL Contacts: Petros T. Boufounos; Toshiaki Koike-Akino; Jonathan Le Roux; Dehong Liu; Suhas Anand Lohit; Yanting Ma; Hassan Mansour; Joshua Rapp; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Artificial Intelligence, Computational Sensing, Machine Learning, Signal Processing, Speech & Audio

Brief - MERL has made numerous contributions to both the organization and technical program of ICASSP 2023, which is being held in Rhodes Island, Greece from June 4-10, 2023.

Organization

Petros Boufounos is serving as General Co-Chair of the conference this year, where he has been involved in all aspects of conference planning and execution.

Perry Wang is the organizer of a special session on Radar-Assisted Perception (RAP), which will be held on Wednesday, June 7. The session will feature talks on signal processing and deep learning for radar perception, pose estimation, and mutual interference mitigation with speakers from both academia (Carnegie Mellon University, Virginia Tech, University of Illinois Urbana-Champaign) and industry (Mitsubishi Electric, Bosch, Waveye).

Anthony Vetro is the co-organizer of the Workshop on Signal Processing for Autonomous Systems (SPAS), which will be held on Monday, June 5, and feature invited talks from leaders in both academia and industry on timely topics related to autonomous systems.

Sponsorship

MERL is proud to be a Silver Patron of the conference and will participate in the student job fair on Thursday, June 8. Please join this session to learn more about employment opportunities at MERL, including openings for research scientists, post-docs, and interns.

MERL is pleased to be the sponsor of two IEEE Awards that will be presented at the conference. We congratulate Prof. Rabab Ward, the recipient of the 2023 IEEE Fourier Award for Signal Processing, and Prof. Alexander Waibel, the recipient of the 2023 IEEE James L. Flanagan Speech and Audio Processing Award.

Technical Program

MERL is presenting 13 papers in the main conference on a wide range of topics including source separation and speech enhancement, radar imaging, depth estimation, motor fault detection, time series recovery, and point clouds. One workshop paper has also been accepted for presentation on self-supervised music source separation.

Perry Wang has been invited to give a keynote talk on Wi-Fi sensing and related standards activities at the Workshop on Integrated Sensing and Communications (ISAC), which will be held on Sunday, June 4.

Additionally, Anthony Vetro will present a Perspective Talk on Physics-Grounded Machine Learning, which is scheduled for Thursday, June 8.

About ICASSP

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 2000 participants each year.

-

- Date & Time: Tuesday, April 25, 2023; 11:00 AM

Speaker: Dan Stowell, Tilburg University / Naturalis Biodiversity Centre

MERL Host: Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Abstract  Machine learning can be used to identify animals from their sound. This could be a valuable tool for biodiversity monitoring, and for understanding animal behaviour and communication. But to get there, we need very high accuracy at fine-grained acoustic distinctions across hundreds of categories in diverse conditions. In our group we are studying how to achieve this at continental scale. I will describe aspects of bioacoustic data that challenge even the latest deep learning workflows, and our work to address this. Methods covered include adaptive feature representations, deep embeddings and few-shot learning.

-

- Date & Time: Monday, December 12, 2022; 1:00pm-5:30pm ET

Location: Mitsubishi Electric Research Laboratories (MERL)/Virtual

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video

Brief - Join MERL's virtual open house on December 12th, 2022! Featuring a keynote, live sessions, research area booths, and opportunities to interact with our research team. Discover who we are and what we do, and learn about internship and employment opportunities.

-

- Date: Thursday, October 6, 2022

Location: Kendall Square, Cambridge, MA

MERL Contacts: Anoop Cherian; Jonathan Le Roux

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Brief - SANE 2022, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Thursday October 6, 2022 in Kendall Square, Cambridge, MA.

It was the 9th edition in the SANE series of workshops, which started in 2012 and was held every year alternately in Boston and New York until 2019. Since the first edition, the audience has grown to a record 200 participants and 45 posters in 2019. After a 2-year hiatus due to the pandemic, SANE returned with an in-person gathering of 140 students and researchers.

SANE 2022 featured invited talks by seven leading researchers from the Northeast: Rupal Patel (Northeastern/VocaliD), Wei-Ning Hsu (Meta FAIR), Scott Wisdom (Google), Tara Sainath (Google), Shinji Watanabe (CMU), Anoop Cherian (MERL), and Chuang Gan (UMass Amherst/MIT-IBM Watson AI Lab). It also featured a lively poster session with 29 posters.

SANE 2022 was co-organized by Jonathan Le Roux (MERL), Arnab Ghoshal (Apple), John Hershey (Google), and Shinji Watanabe (CMU). SANE remained a free event thanks to generous sponsorship by Bose, Google, MERL, and Microsoft.

Slides and videos of the talks will be released on the SANE workshop website.

-

- Date & Time: Tuesday, September 6, 2022; 12:00 PM EDT

Speaker: Chuang Gan, UMass Amherst & MIT-IBM Watson AI Lab

MERL Host: Jonathan Le Roux

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Abstract  Human sensory perception of the physical world is rich and multimodal and can flexibly integrate input from all five sensory modalities -- vision, touch, smell, hearing, and taste. However, in AI, attention has primarily focused on visual perception. In this talk, I will introduce my efforts in connecting vision with sound, which will allow machine perception systems to see objects and infer physics from multi-sensory data. In the first part of my talk, I will introduce a self-supervised approach that could learn to parse images and separate the sound sources by watching and listening to unlabeled videos without requiring additional manual supervision. In the second part of my talk, I will show how we may further infer the underlying causal structure in 3D environments through visual and auditory observations. This enables agents to seek the sound source of a repeating environmental sound (e.g., an alarm) or identify what object has fallen, and where, from an intermittent impact sound.

-

- Date & Time: Tuesday, March 1, 2022; 1:00 PM EST

Speaker: David Harwath, The University of Texas at Austin

MERL Host: Chiori Hori

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Abstract  Humans learn spoken language and visual perception at an early age by being immersed in the world around them. Why can't computers do the same? In this talk, I will describe our ongoing work to develop methodologies for grounding continuous speech signals at the raw waveform level to natural image scenes. I will first present self-supervised models capable of discovering discrete, hierarchical structure (words and sub-word units) in the speech signal. Instead of conventional annotations, these models learn from correspondences between speech sounds and visual patterns such as objects and textures. Next, I will demonstrate how these discrete units can be used as a drop-in replacement for text transcriptions in an image captioning system, enabling us to directly synthesize spoken descriptions of images without the need for text as an intermediate representation. Finally, I will describe our latest work on Transformer-based models of visually-grounded speech. These models significantly outperform the prior state of the art on semantic speech-to-image retrieval tasks, and also learn representations that are useful for a multitude of other speech processing tasks.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm - 5:30pm EST

Location: Virtual Event

Speaker: Prof. Melanie Zeilinger, ETH

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - MERL is excited to announce the second keynote speaker for our Virtual Open House 2021:

Prof. Melanie Zeilinger from ETH.

Our virtual open house will take place on December 9, 2021, 1:00pm - 5:30pm (EST).

Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities. Prof. Zeilinger's talk is scheduled for 3:15pm - 3:45pm (EST).

Registration: https://mailchi.mp/merl/merlvoh2021

Keynote Title: Control Meets Learning - On Performance, Safety and User Interaction

Abstract: With increasing sensing and communication capabilities, physical systems today are becoming one of the largest generators of data, making learning a central component of autonomous control systems. While this paradigm shift offers tremendous opportunities to address new levels of system complexity, variability and user interaction, it also raises fundamental questions of learning in a closed-loop dynamical control system. In this talk, I will present some of our recent results showing how even safety-critical systems can leverage the potential of data. I will first briefly present concepts for using learning for automatic controller design and for a new safety framework that can equip any learning-based controller with safety guarantees. The second part will then discuss how expert and user information can be utilized to optimize system performance, where I will particularly highlight an approach developed together with MERL for personalizing the motion planning in autonomous driving to the individual driving style of a passenger.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm - 5:30pm EST

Location: Virtual Event

Speaker: Prof. Ashok Veeraraghavan, Rice University

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - MERL is excited to announce the first keynote speaker for our Virtual Open House 2021:

Prof. Ashok Veeraraghavan from Rice University.

Our virtual open house will take place on December 9, 2021, 1:00pm - 5:30pm (EST).

Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities. Prof. Veeraraghavan's talk is scheduled for 1:15pm - 1:45pm (EST).

Registration: https://mailchi.mp/merl/merlvoh2021

Keynote Title: Computational Imaging: Beyond the limits imposed by lenses.

Abstract: The lens has long been a central element of cameras, since its early use in the mid-nineteenth century by Niepce, Talbot, and Daguerre. The role of the lens, from the Daguerreotype to modern digital cameras, is to refract light to achieve a one-to-one mapping between a point in the scene and a point on the sensor. This effect enables the sensor to compute a particular two-dimensional (2D) integral of the incident 4D light-field. We propose a radical departure from this practice and the many limitations it imposes. In the talk we focus on two inter-related research projects that attempt to go beyond lens-based imaging.

First, we discuss our lab’s recent efforts to build flat, extremely thin imaging devices by replacing the lens in a conventional camera with an amplitude mask and computational reconstruction algorithms. These lensless cameras, called FlatCams, can be less than a millimeter in thickness and enable applications where size, weight, thickness or cost are the driving factors. Second, we discuss high-resolution, long-distance imaging using Fourier Ptychography, where the need for a large-aperture, aberration-corrected lens is replaced by a camera array and associated phase retrieval algorithms, resulting again in order-of-magnitude reductions in size, weight and cost. Finally, I will spend a few minutes discussing how the holistic computational imaging approach can be used to create ultra-high-resolution wavefront sensors.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm-5:30pm (EST)

Location: Virtual Event

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - Mitsubishi Electric Research Laboratories cordially invites you to join our Virtual Open House, on December 9, 2021, 1:00pm - 5:30pm (EST).

The event will feature keynotes, live sessions, research area booths, and time for open interactions with our researchers. Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities.

Registration: https://mailchi.mp/merl/merlvoh2021

-

- Date & Time: Tuesday, September 28, 2021; 1:00 PM EST

Speaker: Dr. Ruohan Gao, Stanford University

MERL Host: Gordon Wichern

Research Areas: Computer Vision, Machine Learning, Speech & Audio

Abstract: While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both the semantic and spatial signals. In the end, I will also briefly talk about our latest work on multisensory learning for robotics.

-

- Date & Time: Wednesday, December 9, 2020; 1:00-5:00PM EST

Location: Virtual

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

-

- Date: Thursday, October 24, 2019

Location: Columbia University, New York, NY

MERL Contact: Jonathan Le Roux

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - SANE 2019, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Thursday October 24, 2019 at Columbia University, in New York City.

It was the 8th edition in the SANE series of workshops, which started in 2012 and has been held every year alternately in Boston and New York. Since the first edition, the audience has steadily grown, with a previous record of 180 participants in 2017 and 2018, and a new record of 200 participants and 45 posters in 2019.

This year's SANE conveniently took place in conjunction both with the WASPAA workshop, held October 20-23 in upstate New York, and with the DCASE workshop, held October 25-26 in Brooklyn, NY, for a full week of speech and audio enlightenment and delight.

SANE 2019 featured invited talks by seven leading researchers from the Northeast as well as from the international community: Brian Kingsbury (IBM TJ Watson Research Center), Kristen Grauman (University of Texas at Austin, Facebook AI Research), Simon Doclo (University of Oldenburg), Karen Livescu (TTI-Chicago), Gabriel Synnaeve (Facebook AI Research), Hirokazu Kameoka (NTT Communication Science Laboratories), and Ron Weiss (Google Brain). It also featured live demonstrations by Jonathan Le Roux (MERL) and Andrew Titus (Apple), and a lively poster session with 45 posters in Columbia University's Low Memorial Library, a National Historic Landmark.

SANE 2019 was co-organized by Jonathan Le Roux (MERL), Nima Mesgarani (Columbia), John R. Hershey (Google), Shinji Watanabe (Johns Hopkins), and Steven J. Rennie (Pryon Inc.). SANE remained a free event thanks to generous sponsorship by Columbia University, MERL, Google, Apple, and Amazon.

Slides and videos of the talks are available from the SANE workshop website.

-

- Date & Time: Thursday, November 29, 2018; 4-6pm

Location: 201 Broadway, 8th floor, Cambridge, MA

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

Brief - Snacks, demos, science: On Thursday 11/29, Mitsubishi Electric Research Labs (MERL) will host an open house for graduate+ students interested in internships, post-docs, and research scientist positions. The event will be held from 4-6pm and will feature demos & short presentations in our main areas of research including artificial intelligence, robotics, computer vision, speech processing, optimization, machine learning, data analytics, signal processing, communications, sensing, control and dynamical systems, as well as multi-physical modeling and electronic devices. MERL is a high-impact, publication-oriented research lab with very extensive internship and university collaboration programs. Most internships lead to publication; many of our interns and staff have gone on to notable careers at MERL and in academia. Come mix with our researchers, see our state-of-the-art technologies, and learn about our research opportunities. Dress code: casual, with resumes.

Pre-registration for the event is strongly encouraged:

merlopenhouse.eventbrite.com

Current internship and employment openings:

www.merl.com/internship/openings

www.merl.com/employment/employment

Information about working at MERL:

www.merl.com/employment

-

- Date: Thursday, October 18, 2018

Location: Google, Cambridge, MA

MERL Contact: Jonathan Le Roux

Research Area: Speech & Audio

Brief - SANE 2018, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, will be held on Thursday October 18, 2018 at Google, in Cambridge, MA. MERL is one of the organizers and sponsors of the workshop.

It is the 7th edition in the SANE series of workshops, which started at MERL in 2012. Since the first edition, the audience has steadily grown, with a record 180 participants in 2017.

SANE 2018 will feature invited talks by leading researchers from the Northeast, as well as from the international community. It will also feature a lively poster session, open to both students and researchers.

-

- Date & Time: Tuesday, March 6, 2018; 12:00 PM

Speaker: Scott Wisdom, Affectiva

MERL Host: Jonathan Le Roux

Research Area: Speech & Audio

Abstract: Recurrent neural networks (RNNs) are effective, data-driven models for sequential data, such as audio and speech signals. However, like many deep networks, RNNs are essentially black boxes; though they are effective, their weights and architecture are not directly interpretable by practitioners. A major component of my dissertation research is explaining the success of RNNs and constructing new RNN architectures through the process of "deep unfolding," which can construct and explain deep network architectures using an equivalence to inference in statistical models. Deep unfolding yields principled initializations for training deep networks, provides insight into their effectiveness, and assists with interpretation of what these networks learn.

In particular, I will show how RNNs with rectified linear units and residual connections are a particular deep unfolding of a sequential version of the iterative shrinkage-thresholding algorithm (ISTA), a simple and classic algorithm for solving L1-regularized least-squares. This equivalence allows interpretation of state-of-the-art unitary RNNs (uRNNs) as an unfolded sparse coding algorithm. I will also describe a new type of RNN architecture called deep recurrent nonnegative matrix factorization (DR-NMF). DR-NMF is an unfolding of a sparse NMF model of nonnegative spectrograms for audio source separation. Both of these networks outperform conventional LSTM networks while also providing interpretability for practitioners.

-

- Date & Time: Friday, February 2, 2018; 12:00

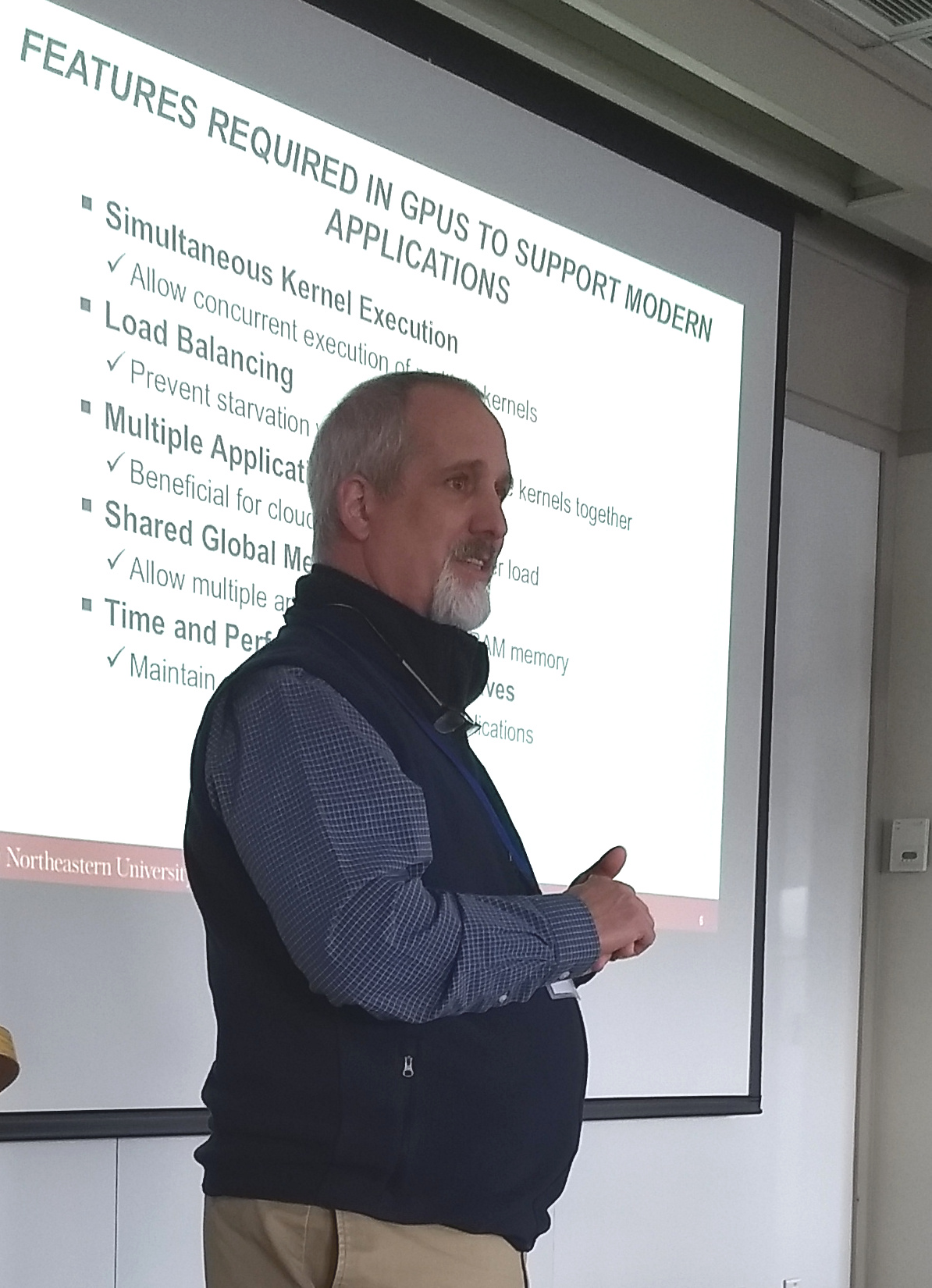

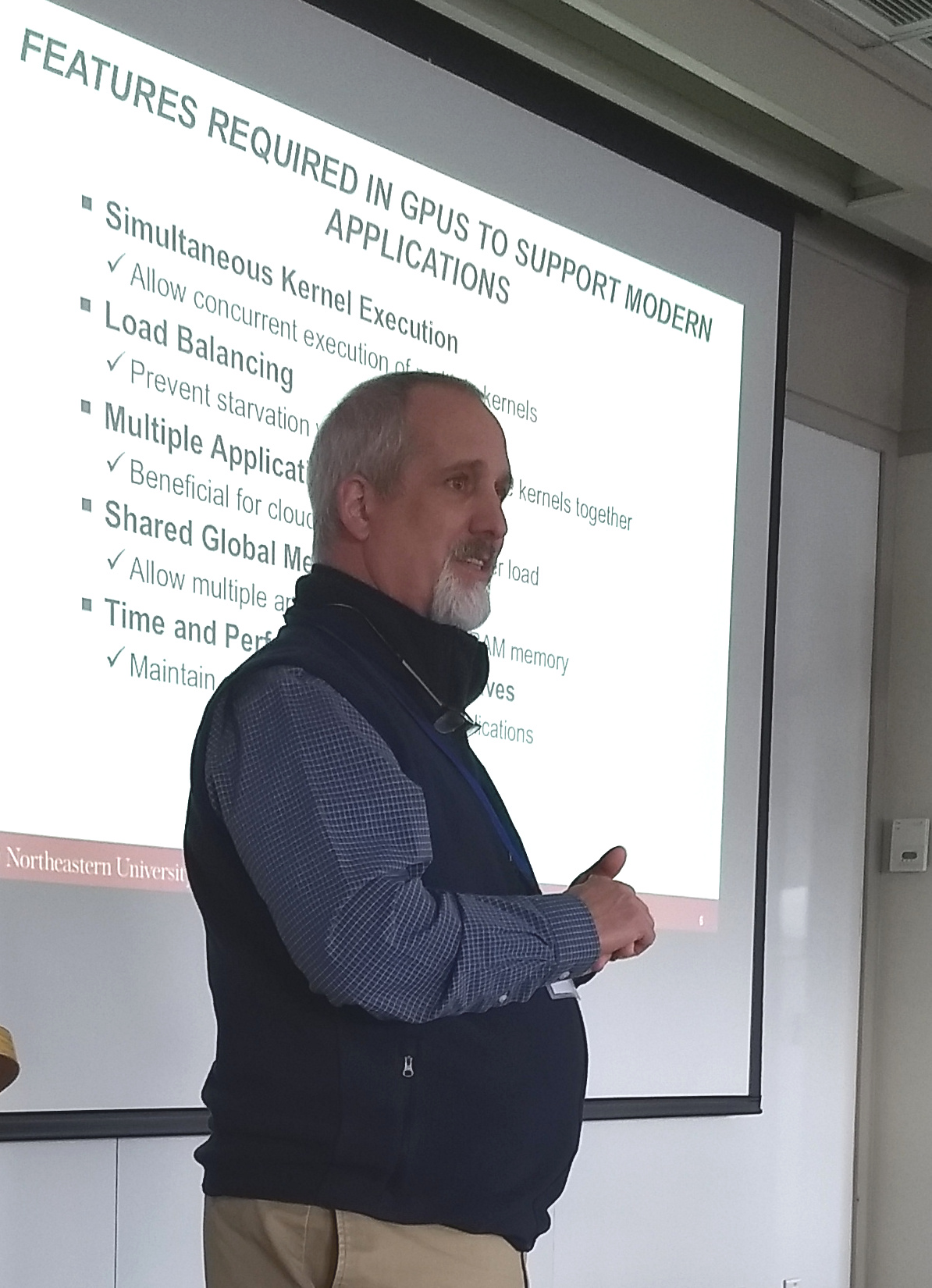

Speaker: Dr. David Kaeli, Northeastern University

MERL Host: Abraham Goldsmith

Research Areas: Control, Optimization, Machine Learning, Speech & Audio

Abstract: GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. But still, there remain challenges in using a GPU to target more general-purpose applications. GPUs achieve impressive speedups when compared to CPUs, since GPUs have a large number of compute cores and high memory bandwidth. Recent GPU performance is approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends with GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous Systems Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.

-

- Date: Sunday, December 10, 2017

Location: Hyatt Regency, Long Beach, CA

MERL Contact: Chiori Hori

Research Area: Speech & Audio

Brief - MERL researcher Chiori Hori led the organization of the 6th edition of the Dialog System Technology Challenges (DSTC6). This year's edition of DSTC is split into three tracks: End-to-End Goal Oriented Dialog Learning, End-to-End Conversation Modeling, and Dialogue Breakdown Detection. A total of 23 teams from all over the world competed in the various tracks, and will meet at the Hyatt Regency in Long Beach, CA, USA on December 10 to present their results at a dedicated workshop colocated with NIPS 2017.

MERL's Speech and Audio Team and Mitsubishi Electric Corporation jointly submitted a set of systems to the End-to-End Conversation Modeling Track, obtaining the best rank among 19 submissions in terms of objective metrics.

-

Acoustic perception is invaluable to humans and robots in understanding objects and events in their environments. These sounds are dependent on properties of the source, the environment, and the receiver. Many humans possess remarkable intuition both to infer key properties of each of these three aspects from a sound and to form expectations of how these different aspects would affect the sound they hear. In order to equip robots and AI agents with similar if not stronger capabilities, our research has taken a two-fold path. First, we collect high-fidelity datasets in both controlled and uncontrolled environments which capture real sounds of objects and rooms. Second, we introduce differentiable physics-based models that can estimate acoustic properties of objects and rooms from minimal amounts of real audio data, and then predict new sounds from these objects and rooms under novel, “unseen” conditions.

Advances in machine learning have led to powerful models for audio and language, proficient in tasks like speech recognition and fluent language generation. Beyond their immense utility in engineering applications, these models offer valuable tools for cognitive science and neuroscience. In this talk, I will demonstrate how these artificial neural network models can be used to understand how the human brain processes language. The first part of the talk will cover how audio neural networks serve as computational accounts for brain activity in the auditory cortex. The second part will focus on the use of large language models, such as those in the GPT family, to non-invasively control brain activity in the human language system.

Recent advances in multimodal models that fuse vision and language are revolutionizing robotics. In this lecture, I will begin by introducing recent multimodal foundational models and their applications in robotics. The second topic of this talk will address our recent work on multimodal language processing in robotics. The shortage of home care workers has become a pressing societal issue, and the use of domestic service robots (DSRs) to assist individuals with disabilities is seen as a possible solution. I will present our work on DSRs that are capable of open-vocabulary mobile manipulation, referring expression comprehension and segmentation models for everyday objects, and future captioning methods for cooking videos and DSRs.

Machine learning can be used to identify animals from their sound. This could be a valuable tool for biodiversity monitoring, and for understanding animal behaviour and communication. But to get there, we need very high accuracy at fine-grained acoustic distinctions across hundreds of categories in diverse conditions. In our group we are studying how to achieve this at continental scale. I will describe aspects of bioacoustic data that challenge even the latest deep learning workflows, and our work to address this. Methods covered include adaptive feature representations, deep embeddings and few-shot learning.

Human sensory perception of the physical world is rich and multimodal and can flexibly integrate input from all five sensory modalities: vision, touch, smell, hearing, and taste. However, in AI, attention has primarily focused on visual perception. In this talk, I will introduce my efforts in connecting vision with sound, which will allow machine perception systems to see objects and infer physics from multi-sensory data. In the first part of my talk, I will introduce a self-supervised approach that could learn to parse images and separate the sound sources by watching and listening to unlabeled videos without requiring additional manual supervision. In the second part of my talk, I will show how we may further infer the underlying causal structure in 3D environments through visual and auditory observations. This enables agents to seek the sound source of repeating environmental sound (e.g., alarm) or identify what object has fallen, and where, from an intermittent impact sound.

Humans learn spoken language and visual perception at an early age by being immersed in the world around them. Why can't computers do the same? In this talk, I will describe our ongoing work to develop methodologies for grounding continuous speech signals at the raw waveform level to natural image scenes. I will first present self-supervised models capable of discovering discrete, hierarchical structure (words and sub-word units) in the speech signal. Instead of conventional annotations, these models learn from correspondences between speech sounds and visual patterns such as objects and textures. Next, I will demonstrate how these discrete units can be used as a drop-in replacement for text transcriptions in an image captioning system, enabling us to directly synthesize spoken descriptions of images without the need for text as an intermediate representation. Finally, I will describe our latest work on Transformer-based models of visually-grounded speech. These models significantly outperform the prior state of the art on semantic speech-to-image retrieval tasks, and also learn representations that are useful for a multitude of other speech processing tasks.