TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models

Text-conditioned image-to-video generation.

MERL Researchers: Suhas Lohit, Anoop Cherian, Ye Wang, Toshiaki Koike-Akino, Tim K. Marks (Computer Vision).

Joint work with: Haomiao Ni (Pennsylvania State University), Sharon X. Huang (Pennsylvania State University), Bernhard Egger (Friedrich-Alexander-Universität Erlangen-Nürnberg)

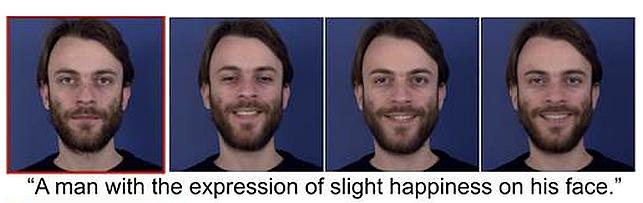

Text-conditioned image-to-video generation (TI2V) aims to synthesize a realistic video starting from a given image (e.g., a woman's photo) and a text description (e.g., "a woman is drinking water."). Existing TI2V frameworks often require costly training on video-text datasets and specific model designs for text and image conditioning. In this paper, we propose TI2V-Zero, a zero-shot, tuning-free method that empowers a pretrained text-to-video (T2V) diffusion model to be conditioned on a provided image, enabling TI2V generation without any optimization, fine-tuning, or introducing external modules. TI2V-Zero is able to generate various types of videos, and the generated videos are more realistic than those generated by competing approaches. Furthermore, we show that TI2V-Zero can seamlessly extend to other tasks such as video infilling and prediction when provided with more images. Its autoregressive design also supports long video generation.

Zero-shot image conditioning of a pretrained T2V diffusion model

To guide video generation with the additional image input, we propose a simple ``Repeat-and-Slide'' strategy that modulates the reverse denoising process, allowing the frozen diffusion model to synthesize a video frame-by-frame starting from the provided image. To ensure temporal continuity, we employ a DDPM inversion strategy to initialize Gaussian noise for each newly synthesized frame and a resampling technique to help preserve visual details.

Video generation results:

1. UCF-101 dataset

2. Open dataset

3. Video prediction example

Software & Data Downloads

MERL Publications

- , "TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2024, pp. 9015-9025.BibTeX TR2024-059 PDF Video Software Presentation

- @inproceedings{Ni2024jun,

- author = {Ni, Haomiao and Egger, Bernhard and Lohit, Suhas and Cherian, Anoop and Wang, Ye and Koike-Akino, Toshiaki and Huang, Sharon X. and Marks, Tim K.},

- title = {{TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models}},

- booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

- year = 2024,

- pages = {9015--9025},

- month = jun,

- url = {https://www.merl.com/publications/TR2024-059}

- }