Action Detection in Videos

We describe an approach to detecting actions in video sequences using a recurrent deep network.

MERL Researchers: Michael J. Jones, Tim K. Marks (Computer Vision).

Joint work with interns: Bharat Singh (University of Maryland) and Ming Shao (Northeastern University).

This research addresses the problem of finding particular actions in a video. Much of the past work in this field has looked at the related problem of action recognition. In action recognition, the algorithm is given a short video clip of an action and asked to classify which action is present. In contrast, action detection requires the algorithm to look through a long video and find the start and stop points of all instances of each known action. We consider action detection to be a more difficult but much more useful problem to solve in practice.
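To make the difference concrete, the following is a minimal sketch of what a detection output looks like, as opposed to a single class label for a whole clip. It assumes a detector that emits one label per frame and collapses runs of identical labels into (label, start, end) segments. The function name `frames_to_segments`, the `"none"` background label, and the example labels are illustrative assumptions, not part of the method described here.

```python
from itertools import groupby


def frames_to_segments(frame_labels, background="none"):
    """Collapse per-frame labels into (label, start_frame, end_frame) tuples.

    end_frame is inclusive. Runs of the background label yield no detection.
    """
    segments = []
    index = 0
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        if label != background:
            segments.append((label, index, index + length - 1))
        index += length
    return segments


# Hypothetical per-frame output for a 10-frame video (labels are made up).
labels = ["none", "reach", "reach", "hand_in", "hand_in", "hand_in",
          "retract", "none", "reach", "reach"]
detections = frames_to_segments(labels)
for label, start, end in detections:
    print(f"{label}: frames {start}-{end}")
```

A recognition system would return one label for a pre-trimmed clip; a detection system must produce every such segment, including repeated instances of the same action.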

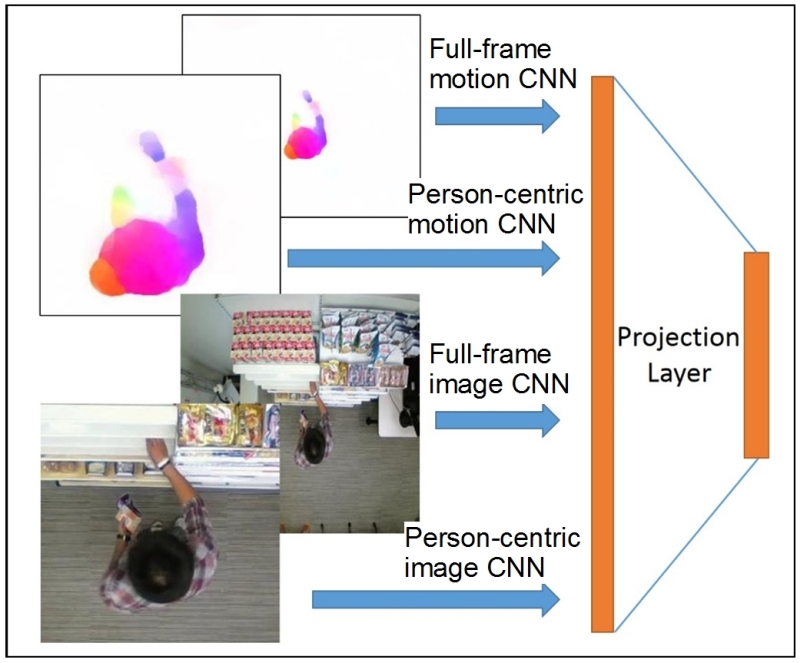

Our approach to action detection uses a deep recurrent neural network. The network consists of four separate "streams" of data: two for person-centric and full-frame (global) video frames, and two for person-centric and full-frame motion vectors. Each of these streams of data is processed by a different convolutional neural network (we use modified VGG networks). The four output feature vectors are combined and used as input to a bi-directional Long Short-Term Memory (LSTM) layer which models long-term temporal dynamics.

We test on two action detection datasets: the MPII Cooking 2 Dataset, and a new MERL Shopping Dataset that we introduce and make available to the community (see MERL Shopping Dataset, below). The results demonstrate that our method significantly outperforms state-of-the-art action detection methods on both datasets.

This video shows the output of our action detection algorithm on a test video from the MERL Shopping Dataset. The yellow box in the upper left shows the action that is detected by our method ("MSB-RNN" = Multi-Stream Bi-directional Recurrent Neural Network).

Details of Our Method

Our multi-stream network is illustrated in Figure 1. It consists of four streams. Two of the streams process motion information (pixel trajectories, which are similar to optical flow), one stream for the person-centric (cropped) region and one for the whole frame. The other two streams process image information, one each for the person-centric region and the whole frame. Each stream is processed by a modified VGG network that outputs a feature vector representing the stream. The four feature vectors are concatenated together and projected into a lower-dimensional feature vector.
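The fusion step at the end of the paragraph above can be sketched as follows. This is a toy illustration in plain Python, not the actual implementation: the vector sizes (`STREAM_DIM`, `FUSED_DIM`), the random stand-in features, and the random projection weights are all assumptions chosen for readability. In the real network the per-stream vectors come from the modified VGG networks and the projection is learned.

```python
import random


def concat(vectors):
    """Concatenate a list of feature vectors into one long vector."""
    return [x for v in vectors for x in v]


def project(vector, weights, bias):
    """Linear projection: out[i] = sum_j weights[i][j] * vector[j] + bias[i]."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


rng = random.Random(0)
STREAM_DIM = 8  # assumed toy size of each stream's feature vector
FUSED_DIM = 4   # assumed toy size of the projected vector

# Random stand-ins for the four per-stream CNN outputs at one time step.
streams = {
    "person_motion": [rng.random() for _ in range(STREAM_DIM)],
    "frame_motion": [rng.random() for _ in range(STREAM_DIM)],
    "person_image": [rng.random() for _ in range(STREAM_DIM)],
    "frame_image": [rng.random() for _ in range(STREAM_DIM)],
}

concatenated = concat(list(streams.values()))

# Random weights stand in for the learned projection matrix.
weights = [[rng.uniform(-0.1, 0.1) for _ in range(len(concatenated))]
           for _ in range(FUSED_DIM)]
bias = [0.0] * FUSED_DIM

fused = project(concatenated, weights, bias)
print(len(concatenated), len(fused))
```

One fused vector of this kind is produced for every time step of the video.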

The lower-dimensional feature vector output by the multi-stream network (MSN) is then fed into a bi-directional Long Short-Term Memory (LSTM) network, as shown in Figure 2.
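The idea of a bi-directional LSTM can be sketched in plain Python: one LSTM reads the sequence of fused feature vectors forward in time, a second reads it backward, and the two hidden states at each time step are concatenated. The cell below uses the standard LSTM gate equations. The dimensions, random weights, and function names are illustrative assumptions; the layer sizes and training procedure of the actual network are not specified here.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_lstm_params(input_dim, hidden_dim, rng):
    """Random weights for the four gates: input, forget, output, candidate."""
    def matrix(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                for _ in range(rows)]
    return {gate: (matrix(hidden_dim, input_dim + hidden_dim),
                   [0.0] * hidden_dim)
            for gate in ("i", "f", "o", "g")}


def lstm_step(params, x, h, c):
    """One standard LSTM step; returns the new hidden and cell states."""
    z = x + h  # list concatenation of input and previous hidden state

    def affine(gate):
        weights, bias = params[gate]
        return [sum(w * v for w, v in zip(row, z)) + b
                for row, b in zip(weights, bias)]

    i = [sigmoid(a) for a in affine("i")]
    f = [sigmoid(a) for a in affine("f")]
    o = [sigmoid(a) for a in affine("o")]
    g = [math.tanh(a) for a in affine("g")]
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, sequence, hidden_dim):
    """Run one LSTM over the sequence; return the hidden state at each step."""
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    outputs = []
    for x in sequence:
        h, c = lstm_step(params, x, h, c)
        outputs.append(h)
    return outputs


def bidirectional_lstm(fwd_params, bwd_params, sequence, hidden_dim):
    """Concatenate forward-in-time and backward-in-time hidden states."""
    forward = run_lstm(fwd_params, sequence, hidden_dim)
    # Process the reversed sequence, then flip back to align time steps.
    backward = run_lstm(bwd_params, sequence[::-1], hidden_dim)[::-1]
    return [f + b for f, b in zip(forward, backward)]


rng = random.Random(0)
INPUT_DIM, HIDDEN_DIM, NUM_STEPS = 4, 3, 5  # assumed toy sizes
sequence = [[rng.random() for _ in range(INPUT_DIM)] for _ in range(NUM_STEPS)]
fwd = make_lstm_params(INPUT_DIM, HIDDEN_DIM, rng)
bwd = make_lstm_params(INPUT_DIM, HIDDEN_DIM, rng)
outputs = bidirectional_lstm(fwd, bwd, sequence, HIDDEN_DIM)
print(len(outputs), len(outputs[0]))
```

Because of the backward pass, the representation at each time step depends on later frames as well as earlier ones, which is useful when the end of an action helps disambiguate its beginning.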

More details about our action detection algorithm and results can be found in our CVPR 2016 paper below.

Software & Data Downloads

MERL Shopping Dataset

As part of this research, we collected a new dataset for training and testing action detection algorithms. Our MERL Shopping Dataset consists of 106 videos, each about 2 minutes long. The videos are from a fixed overhead camera looking down at people shopping in a grocery store setting. Each video contains several instances of the following 5 actions: "Reach To Shelf" (reach hand into shelf), "Retract From Shelf" (retract hand from shelf), "Hand In Shelf" (extended period with hand in the shelf), "Inspect Product" (inspect product while holding it in hand), and "Inspect Shelf" (look at shelf while not touching or reaching for the shelf).

This dataset can be freely downloaded for research purposes from:

ftp.merl.com/pub/tmarks/MERL_Shopping_Dataset/

If you use or refer to this dataset, please cite the referenced paper.

MERL Publications

- Singh, B.; Marks, T.K.; Jones, M.J.; Tuzel, C.O.; Shao, M., "A Multi-Stream Bi-Directional Recurrent Neural Network for Fine-Grained Action Detection", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), DOI: 10.1109/CVPR.2016.216, June 2016, pp. 1961-1970. MERL report TR2016-080.

    @inproceedings{Singh2016jun,
      author = {Singh, Bharat and Marks, Tim K. and Jones, Michael J. and Tuzel, C. Oncel and Shao, Ming},
      title = {{A Multi-Stream Bi-Directional Recurrent Neural Network for Fine-Grained Action Detection}},
      booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year = 2016,
      pages = {1961--1970},
      month = jun,
      doi = {10.1109/CVPR.2016.216},
      url = {https://www.merl.com/publications/TR2016-080}
    }