Private, Secure, and Reliable Artificial Intelligence

Advancing Privacy, Security, and Trust in Next-Generation AI Systems

MERL Researchers: Ye Wang, Jing Liu, Toshiaki Koike-Akino, Kieran Parsons.

Joint work with: Zhuohang Li (Vanderbilt University), Andrew Lowy (CISPA Helmholtz Center for Information Security), Bradley Malin (Vanderbilt University), Tsunato Nakai (Mitsubishi Electric Corporation), Kento Oonishi (Mitsubishi Electric Corporation), Takuya Higashi (Mitsubishi Electric Corporation), Md Rafi Ur Rashid (Pennsylvania State University), Shagufta Mehnaz (Pennsylvania State University), Ryo Hase (Mitsubishi Electric Corporation), Ashley Lewis (The Ohio State University), Michael White (The Ohio State University).

At MERL, we are advancing the frontiers of private, secure, and reliable machine learning. Our recent research explores vulnerabilities and defense methods for foundation models and generative AI systems, contributing to the development of safer and more responsible AI technologies.

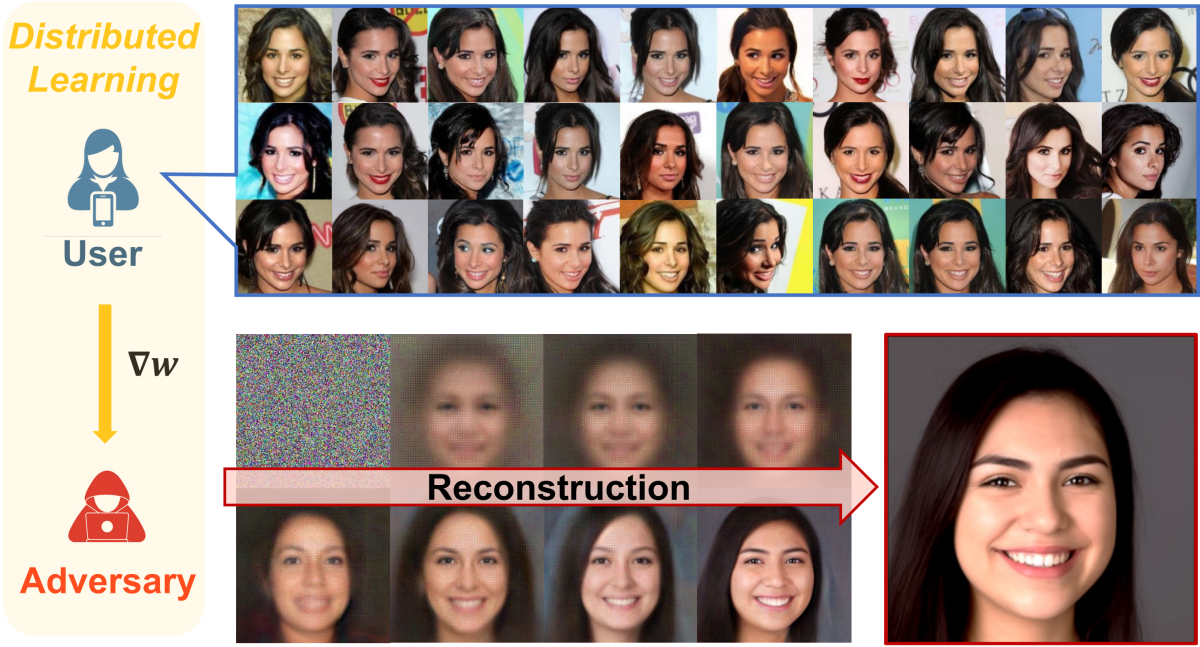

Inference Privacy Risks from Distributed Learning Gradients

We investigated a new privacy-attack scenario in federated and distributed learning: user-level gradient inversion. Unlike prior work that reconstructs individual samples from gradients, we aim to recover user-specific semantic information, such as representative facial features. We introduce a method that leverages a denoising diffusion model as a powerful image prior, enabling realistic recovery of user attributes even in the challenging setting where gradients are aggregated over large batches. Our experiments on face datasets show that our diffusion-based inversion reconstructs visually realistic, privacy-sensitive user characteristics, revealing an attack surface that extends beyond sample-level leakage.
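
Reproducing the diffusion prior is beyond a short example, but a classic analytic observation (not the method described above) shows why shared gradients carry input information at all. For a fully connected layer z = Wx + b, the gradient of row i of W equals the gradient of b_i times the input, so their ratio recovers x exactly for a single sample and returns a residual-weighted combination of the inputs for a batch. The sketch below assumes a single linear layer trained with squared-error loss; the function names are hypothetical.

```python
import numpy as np

def linear_layer_grads(W, b, X, Y):
    """Gradients of 0.5 * mean_n ||x_n @ W.T + b - y_n||^2 with respect to W and b.

    W: (k, d) weights, b: (k,) bias, X: (B, d) inputs, Y: (B, k) targets.
    """
    residual = (X @ W.T + b - Y) / X.shape[0]  # dLoss/dz for each sample, shape (B, k)
    dW = residual.T @ X                        # sum_n residual_n x_n^T, shape (k, d)
    db = residual.sum(axis=0)                  # sum_n residual_n, shape (k,)
    return dW, db

def invert_linear_layer(dW, db, row=0):
    """Analytic inversion: dW[row] / db[row] is a residual-weighted average of the inputs."""
    if np.isclose(db[row], 0.0):
        raise ValueError("bias gradient is ~0 for this row; pick another row")
    return dW[row] / db[row]

rng = np.random.default_rng(0)
W, b = rng.normal(size=(3, 5)), rng.normal(size=3)

# Single sample: the input is recovered exactly from the shared gradient.
x = rng.normal(size=(1, 5))
dW, db = linear_layer_grads(W, b, x, np.zeros((1, 3)))
print(np.allclose(invert_linear_layer(dW, db), x[0]))  # True

# Batch of 64 samples with equal residuals: the result is the batch mean, an aggregate
# "representative" input rather than any individual sample.
X = rng.normal(size=(64, 5))
dW, db = linear_layer_grads(W, b, X, X @ W.T + b - 1.0)
print(np.allclose(invert_linear_layer(dW, db), X.mean(axis=0)))  # True
```

The batch case hints at why aggregation alone does not remove user-level information: what survives is a summary of the user's data, which a strong prior (such as the diffusion model above) can then turn into realistic reconstructions.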

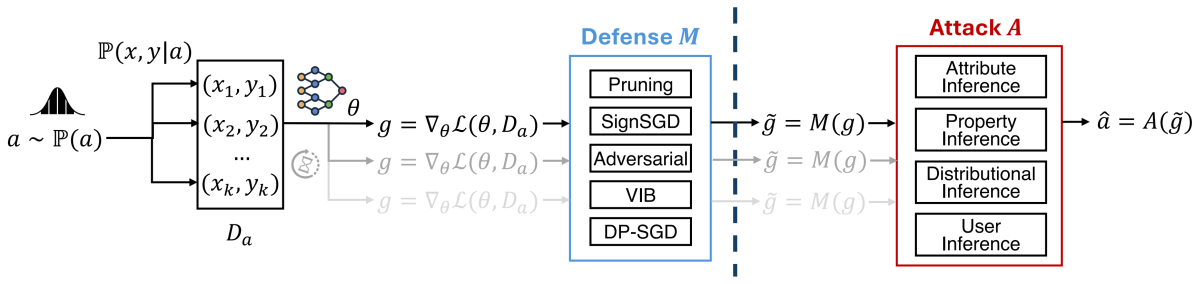

We developed a unified analytical framework to quantify inference privacy risks arising from gradient sharing in distributed learning. Our formulation models various adversarial goals, including attribute, property, and distribution inference, within a common game-theoretic structure, allowing systematic comparison across attacks and defenses. Through extensive experiments, we show that simply aggregating gradients (e.g., via larger batch sizes) does not eliminate privacy leakage. We further evaluate several defenses, including gradient pruning, adversarial perturbation, variational information bottlenecking, and differential privacy. To estimate worst-case leakage, we introduce an auditing method that uses adversarial "canary" records. Overall, our study provides a holistic understanding of gradient-based inference risks and of effective mitigation strategies.
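
Gradient pruning, the first defense listed, can be sketched in a few lines. The version below assumes a magnitude-based rule (keep the largest-magnitude fraction of entries and zero the rest before sharing); the exact pruning rule and ratios used in the study may differ, and `prune_gradient` is a hypothetical name.

```python
import numpy as np

def prune_gradient(grad: np.ndarray, keep_ratio: float) -> np.ndarray:
    """Magnitude-based gradient pruning: keep the largest `keep_ratio` fraction of entries.

    All other entries are set to zero before the gradient is shared.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    flat = grad.ravel()
    k = max(1, int(round(keep_ratio * flat.size)))
    # argpartition places the indices of the k largest |entries| in the last k slots
    top_k = np.argpartition(np.abs(flat), flat.size - k)[flat.size - k:]
    pruned = np.zeros_like(flat)
    pruned[top_k] = flat[top_k]
    return pruned.reshape(grad.shape)

g = np.array([[0.1, -3.0], [2.0, 0.5]])
print(prune_gradient(g, keep_ratio=0.5))  # keeps -3.0 and 2.0; zeroes 0.1 and 0.5
```

Smaller `keep_ratio` values discard more of the gradient; how much this reduces inference leakage relative to its utility cost is an empirical question of the kind the study above evaluates.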

Data Privacy for Model Training and Fine-Tuning

We study why differential privacy (DP) mechanisms with large ε values, which are common in practice, still appear effective against membership inference attacks (MIAs) despite offering weak theoretical guarantees. To explain this observation, we propose a new privacy metric called Practical Membership Privacy (PMP), which offers strong guarantees against an adversary with realistically modelled uncertainties. By analyzing two common DP techniques, the Gaussian and exponential mechanisms, we show that even when ε is large, the PMP risk can remain small, yielding strong practical privacy guarantees. Our findings help explain the gap between DP's worst-case theory and its real-world defensive performance.
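
The "weak theoretical guarantees" can be made concrete with a standard hypothesis-testing consequence of (ε, δ)-DP; this is the classical worst-case bound, not the PMP metric. With false-positive rate α and false-negative rate β, DP requires α + e^ε β ≥ 1 − δ and e^ε α + β ≥ 1 − δ; adding the two gives α + β ≥ 2(1 − δ)/(1 + e^ε), so with a balanced member/non-member prior any attack's accuracy is at most (e^ε + δ)/(1 + e^ε). The function name below is hypothetical.

```python
import math

def mia_accuracy_bound(epsilon: float, delta: float = 0.0) -> float:
    """Worst-case upper bound on membership-inference accuracy under (epsilon, delta)-DP.

    Assumes a balanced prior (each candidate record is a member with probability 1/2).
    Equals (e^eps + delta) / (1 + e^eps).
    """
    if epsilon < 0 or not 0.0 <= delta <= 1.0:
        raise ValueError("need epsilon >= 0 and 0 <= delta <= 1")
    t = math.exp(-epsilon)  # divide through by e^eps so large epsilon cannot overflow
    return (1.0 + delta * t) / (1.0 + t)

for eps in (0.5, 1.0, 4.0, 8.0):
    print(f"epsilon = {eps:>4}: accuracy bound = {mia_accuracy_bound(eps):.4f}")
```

At ε = 8 the bound already exceeds 0.999, so it permits near-perfect attacks; this is why the worst-case guarantee alone cannot explain the empirical resilience of large-ε mechanisms.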

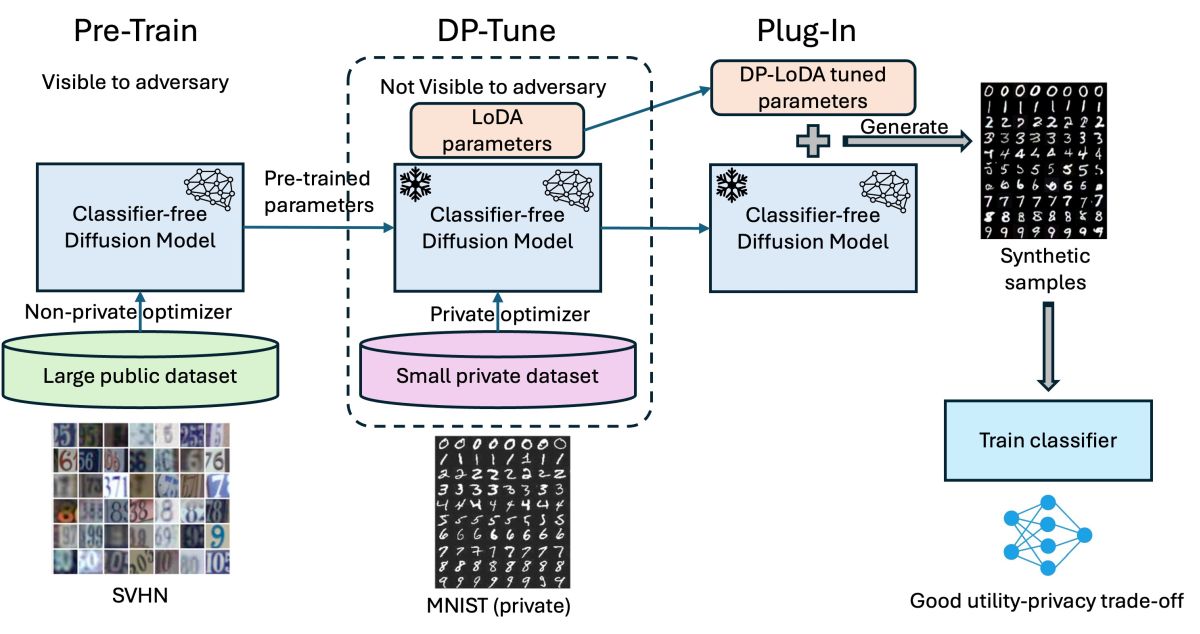

We propose combining Parameter-Efficient Fine-Tuning (PEFT) techniques with Differentially Private Stochastic Gradient Descent (DP-SGD) to enable effective, private adaptation of public foundation models on potentially sensitive data. Specifically, we incorporate Low-Dimensional Adaptation (LoDA) modules to use the privacy budget more efficiently during differentially private fine-tuning of diffusion models. Our experiments show that the privately fine-tuned models can generate synthetic data for effectively training downstream classifiers, achieving a favorable privacy-accuracy tradeoff.
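
The core DP-SGD update (per-example clipping, Gaussian noise, averaging) can be sketched as follows, applied only to a small set of trainable adapter parameters while the base model stays frozen. This is a generic DP-SGD step, not the LoDA module itself; it assumes per-example gradients are already available, and the function and variable names are hypothetical.

```python
import numpy as np

def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update on the trainable parameters only.

    params: (d,) trainable parameters (e.g., a small adapter; the base model stays frozen).
    per_example_grads: (B, d) gradient of each example's loss with respect to `params`.
    """
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # Clip: rescale each per-example gradient so its L2 norm is at most clip_norm.
    clipped = per_example_grads * np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    # Add Gaussian noise with std noise_multiplier * clip_norm to the summed gradient.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / per_example_grads.shape[0]
    return params - lr * noisy_mean

rng = np.random.default_rng(0)
adapter = np.zeros(8)              # hypothetical 8-parameter adapter
grads = rng.normal(size=(32, 8))   # stand-in per-example gradients for a batch of 32
adapter = dp_sgd_step(adapter, grads, lr=0.1, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Noise is drawn for every trainable coordinate, so restricting training to a low-dimensional adapter is one common intuition for why PEFT spends the privacy budget more efficiently. Converting the noise multiplier, sampling rate, and number of steps into an (ε, δ) guarantee requires a privacy accountant, which is not shown.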

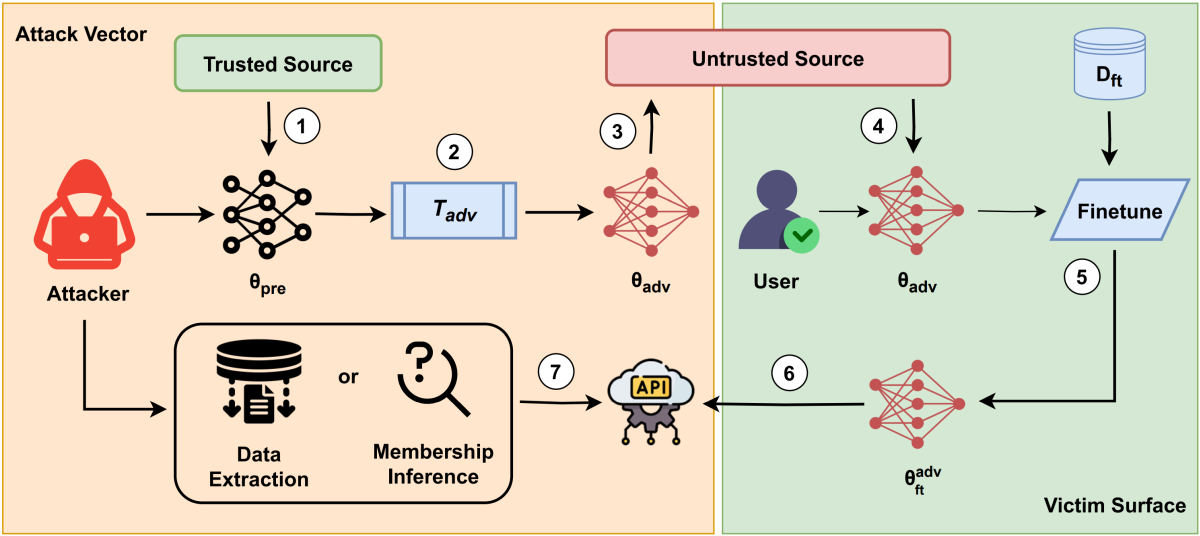

We uncover a novel threat vector that weaponizes machine unlearning. A maliciously modified pretrained model can be crafted to leak private information during downstream fine-tuning while still appearing to perform normally. Embedding unlearning triggers within the model biases it to expose sensitive details (such as membership or verbatim text) once it is fine-tuned on private data. Our experiments show that these poisoned models can pass standard utility tests, making them stealthy and particularly dangerous. These findings underscore the need for rigorous verification of pretrained model integrity before deploying such models on sensitive datasets.
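
Membership leakage of this kind is commonly measured with simple baselines such as the loss-threshold attack, which flags a sample as a training member when the model's loss on it is unusually low. The sketch below is that generic baseline, not the unlearning-based poisoning itself; the loss values are illustrative and the function names are hypothetical. Intuitively, a model manipulated to widen the loss gap between its fine-tuning data and unseen data would make even this baseline more accurate.

```python
import numpy as np

def loss_threshold_mia(losses, threshold):
    """Predict 'member' (True) for samples whose loss under the model is below threshold."""
    return np.asarray(losses, dtype=float) < threshold

def tpr_fpr(member_losses, nonmember_losses, threshold):
    """True-positive rate on known members and false-positive rate on known non-members."""
    tpr = loss_threshold_mia(member_losses, threshold).mean()
    fpr = loss_threshold_mia(nonmember_losses, threshold).mean()
    return float(tpr), float(fpr)

# Illustrative per-sample losses of a fine-tuned model (hypothetical values).
members = [0.1, 0.2, 0.9]
nonmembers = [0.5, 1.2, 1.5]
print(tpr_fpr(members, nonmembers, threshold=0.6))
```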

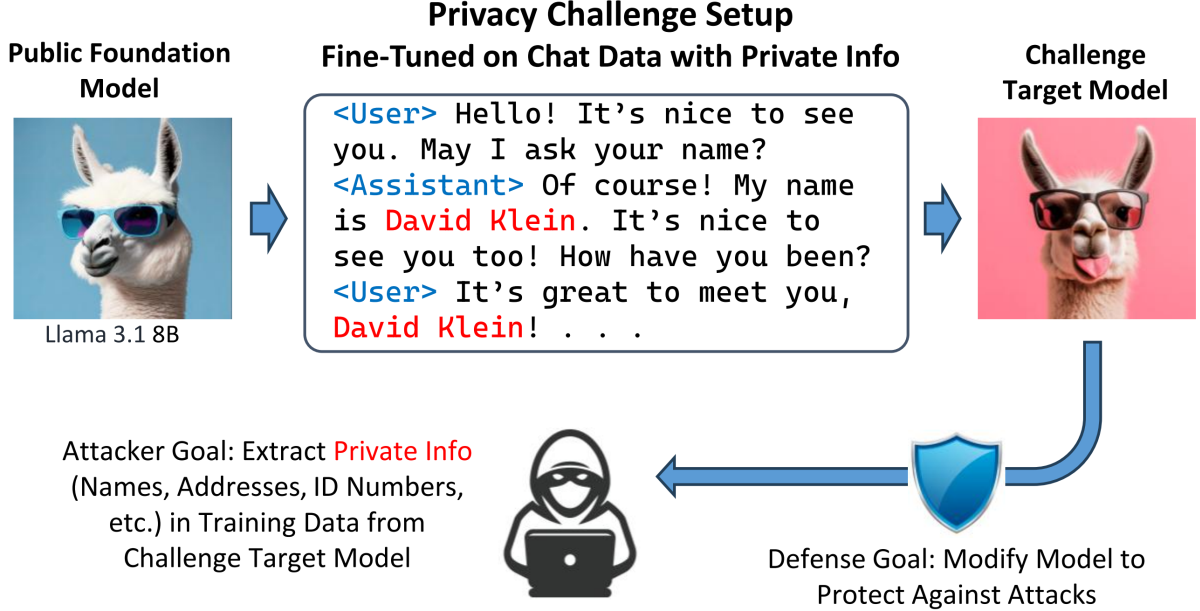

NeurIPS 2024 Large Language Model Privacy Challenge

In our NeurIPS 2024 LLM Privacy Challenge Red Team submission, we designed a joint-context attack that enhances the extraction of personally identifiable information (PII) from large language models. Our method runs a beam search that jointly considers multiple contexts preceding the target PII. We developed scoring strategies that favor plausible PII tokens and filter out improbable continuations, improving extraction accuracy. In the competition, our approach achieved an Attack Success Rate (ASR) of 18.99%, ranked 4th on the public leaderboard, and won the Special Award for Practical Attack.
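
A minimal sketch of beam search over several contexts, assuming a hypothetical `lm` interface that maps a token sequence to next-token log-probabilities. Summing log-probabilities across contexts and dropping tokens below `min_logprob` in any context are illustrative stand-ins for the submission's scoring and filtering strategies, which are described in the paper; `toy_lm` is a made-up model for demonstration.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical LM interface: token sequence -> {next token: log-probability}.
NextTokenFn = Callable[[Tuple[str, ...]], Dict[str, float]]

def joint_context_beam_search(
    lm: NextTokenFn,
    contexts: Sequence[Tuple[str, ...]],
    length: int,
    beam_width: int = 3,
    min_logprob: float = -10.0,
) -> List[Tuple[Tuple[str, ...], float]]:
    """Beam search in which a candidate's score is its log-probability summed over contexts."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for _ in range(length):
        candidates = []
        for prefix, score in beams:
            # One next-token distribution per context, each conditioned on the same prefix.
            dists = [lm(tuple(ctx) + prefix) for ctx in contexts]
            shared = set(dists[0]).intersection(*dists[1:])
            for tok in shared:
                lps = [d[tok] for d in dists]
                if min(lps) < min_logprob:  # drop tokens implausible under any context
                    continue
                candidates.append((prefix + (tok,), score + sum(lps)))
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams

def toy_lm(seq: Tuple[str, ...]) -> Dict[str, float]:
    """Hypothetical stand-in for a real model's next-token distribution."""
    if seq[-1] == "at":
        return {"555": math.log(0.6), "123": math.log(0.4)}
    if seq[-1] == ":":
        return {"555": math.log(0.3), "123": math.log(0.7)}
    return {"0100": math.log(0.9), "9999": math.log(0.1)}

contexts = [("call", "me", "at"), ("phone", ":")]
best, score = joint_context_beam_search(toy_lm, contexts, length=2)[0]
print(best)  # ('123', '0100'); the first context alone would favor '555'
```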

As part of the NeurIPS 2024 LLM Privacy Challenge Blue Team track, we proposed a simple yet effective defense against PII extraction. It combines two key strategies: (i) data unlearning to make the model forget PII contained in its fine-tuning data, and (ii) a system prompt guard that suppresses continuations containing sensitive data at inference time. Together, these mechanisms reduced the success rate of the baseline attack from 3.91% to 0.06% without degrading model utility, and the defense won the 3rd place award. Our results highlight that careful data unlearning and prompt-based defenses can offer strong, lightweight privacy protection in LLM fine-tuning.

Secure and Reliable Language Models

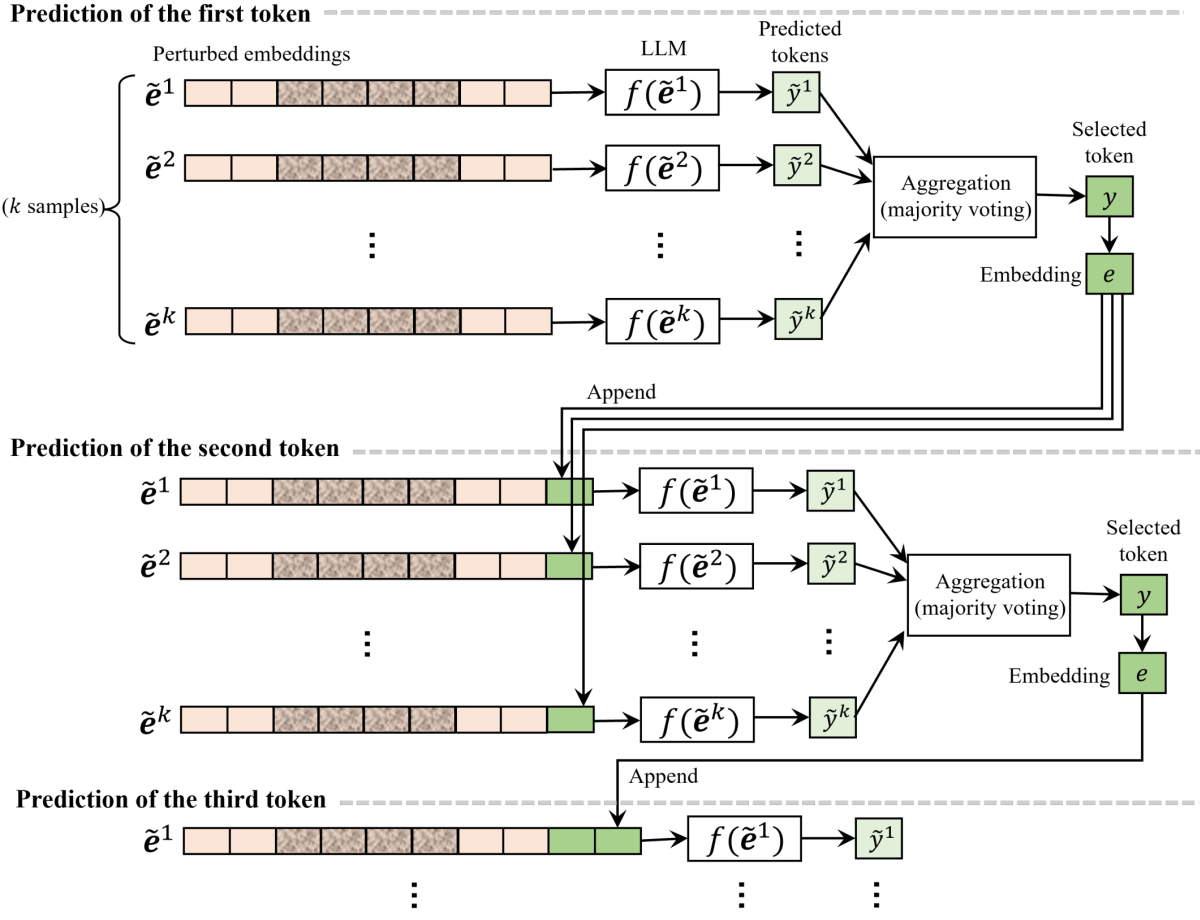

We address the vulnerability of large language models to adversarial or "jailbreak" prompts by introducing RESTA (Randomized Embedding Smoothing and Token Aggregation). Our technique perturbs token embeddings with small random noise and aggregates token selection over multiple randomized samples. This process maintains semantic coherence while reducing susceptibility to adversarial manipulations. Experiments across several attacks and models show that our method achieves a superior robustness-utility tradeoff compared to existing inference-time defenses. We demonstrate that embedding-level smoothing is a practical and scalable approach to enhance LLM safety.
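
The two ingredients named above can be sketched for a single decoding step: add Gaussian noise to the input embeddings, query the model once per noisy copy, and aggregate the chosen tokens. The sketch assumes majority voting as the aggregation rule and a hypothetical `logits_fn` model head; RESTA's actual noise placement and aggregation may differ, and a real implementation would repeat this for every generated token of an LLM.

```python
from collections import Counter

import numpy as np

def smoothed_next_token(embed_seq, logits_fn, sigma, num_samples, rng):
    """Pick the next token by majority vote over noisy copies of the input embeddings.

    embed_seq: (T, d) token embeddings of the prompt.
    logits_fn: model mapping (T, d) embeddings to (V,) next-token logits (hypothetical here).
    """
    votes = Counter()
    for _ in range(num_samples):
        noisy = embed_seq + rng.normal(0.0, sigma, size=embed_seq.shape)  # embedding smoothing
        votes[int(np.argmax(logits_fn(noisy)))] += 1                      # one vote per sample
    return votes.most_common(1)[0][0]                                     # token aggregation

# Toy model: 4-token vocabulary, logits computed from the mean prompt embedding.
E = 5.0 * np.eye(4)

def toy_logits(X):
    return X.mean(axis=0) @ E.T

prompt = E[[2, 2, 2]]
print(smoothed_next_token(prompt, toy_logits, sigma=0.1, num_samples=9, rng=np.random.default_rng(0)))  # 2
```

Larger `sigma` and `num_samples` make the vote less sensitive to small adversarial changes in the prompt, at the cost of more forward passes and possible drift from the unperturbed output; this is the robustness-utility tradeoff described above.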

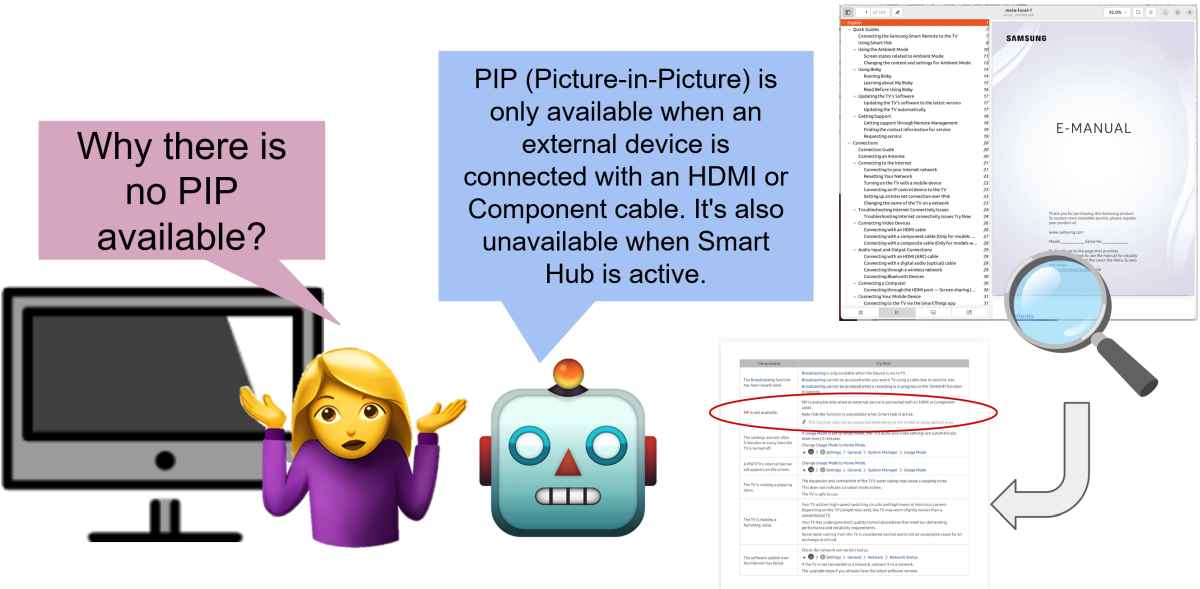

We tackle the challenge of developing compact, low-cost QA agents that maintain accuracy and reduce hallucinations. Our system uses a retrieval-augmented question-answering pipeline built on a product manual dataset, and we compare two training regimes: knowledge distillation from a stronger LLM (e.g., GPT-4o) and self-training on the model's own synthetic outputs. We show that training with synthetic data generated by LLMs can outperform crowdsourced data in reducing hallucination, and that self-training yields comparable gains to distillation. Further, we implement a contextual fallback mechanism whereby the agent responds "I don't know" when faced with unanswerable queries or retrieval failures. Our results demonstrate that small QA models can achieve high reliability using efficient training strategies and synthetic data, thereby reducing reliance on proprietary models and large human annotation budgets.
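
A minimal sketch of a contextual fallback in a retrieval-augmented pipeline, assuming a rule-based design: answer only when retrieval returns a sufficiently relevant passage and the generator produces an answer, otherwise reply "I don't know." In the work above, the fallback behavior may instead be learned by the small model; the relevance threshold, `retrieve`, `generate`, and the manual text are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

FALLBACK = "I don't know."

@dataclass
class Passage:
    text: str
    score: float  # retrieval relevance score; higher means more relevant

def answer_with_fallback(
    question: str,
    retrieve: Callable[[str], List[Passage]],
    generate: Callable[[str, List[Passage]], Optional[str]],
    min_score: float = 0.5,
) -> str:
    """Answer from retrieved manual passages, or fall back when grounding is missing."""
    relevant = [p for p in retrieve(question) if p.score >= min_score]
    if not relevant:
        return FALLBACK  # retrieval failure: nothing relevant enough to ground an answer
    answer = generate(question, relevant)
    return answer if answer else FALLBACK  # the generator itself may decline to answer

# Hypothetical retriever and generator for illustration.
def retrieve(question: str) -> List[Passage]:
    if "reset" in question:
        return [Passage("Hold the power button for 10 seconds to reset.", 0.9)]
    return [Passage("Warranty terms are listed on page 2.", 0.1)]

def generate(question: str, passages: List[Passage]) -> Optional[str]:
    return passages[0].text

print(answer_with_fallback("How do I reset the device?", retrieve, generate))
print(answer_with_fallback("What color is the box?", retrieve, generate))  # I don't know.
```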

MERL Publications

- Li, Z., Lowy, A., Liu, J., Koike-Akino, T., Malin, B., Parsons, K., Wang, Y., "Exploring User-level Gradient Inversion with a Diffusion Prior", International Workshop on Federated Learning in the Age of Foundation Models in Conjunction with NeurIPS, December 2023 (MERL TR2023-149).

      @inproceedings{Li2023dec,
        author = {Li, Zhuohang and Lowy, Andrew and Liu, Jing and Koike-Akino, Toshiaki and Malin, Bradley and Parsons, Kieran and Wang, Ye},
        title = {{Exploring User-level Gradient Inversion with a Diffusion Prior}},
        booktitle = {International Workshop on Federated Learning in the Age of Foundation Models in Conjunction with NeurIPS},
        year = 2023,
        month = dec,
        url = {https://www.merl.com/publications/TR2023-149}
      }

- Lowy, A., Li, Z., Liu, J., Koike-Akino, T., Parsons, K., Wang, Y., "Why Does Differential Privacy with Large ε Defend Against Practical Membership Inference Attacks?", AAAI Workshop on Privacy-Preserving Artificial Intelligence, February 2024 (MERL TR2024-009).

      @inproceedings{Lowy2024feb2,
        author = {Lowy, Andrew and Li, Zhuohang and Liu, Jing and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Why Does Differential Privacy with Large ε Defend Against Practical Membership Inference Attacks?}},
        booktitle = {AAAI Workshop on Privacy-Preserving Artificial Intelligence},
        year = 2024,
        month = feb,
        url = {https://www.merl.com/publications/TR2024-009}
      }

- Liu, J., Lowy, A., Koike-Akino, T., Parsons, K., Wang, Y., "Efficient Differentially Private Fine-Tuning of Diffusion Models", International Conference on Machine Learning (ICML) workshop (Next Generation of AI Safety), July 2024 (MERL TR2024-104).

      @inproceedings{Liu2024jul,
        author = {Liu, Jing and Lowy, Andrew and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Efficient Differentially Private Fine-Tuning of Diffusion Models}},
        booktitle = {International Conference on Machine Learning (ICML) workshop (Next Generation of AI Safety)},
        year = 2024,
        month = jul,
        url = {https://www.merl.com/publications/TR2024-104}
      }

- Li, Z., Lowy, A., Liu, J., Koike-Akino, T., Parsons, K., Malin, B., Wang, Y., "Analyzing Inference Privacy Risks Through Gradients In Machine Learning", ACM Conference on Computer and Communications Security (CCS), DOI: 10.1145/3658644.3690304, October 2024, pp. 3466-3480 (MERL TR2024-141).

      @inproceedings{Li2024oct,
        author = {Li, Zhuohang and Lowy, Andrew and Liu, Jing and Koike-Akino, Toshiaki and Parsons, Kieran and Malin, Bradley and Wang, Ye},
        title = {{Analyzing Inference Privacy Risks Through Gradients In Machine Learning}},
        booktitle = {Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security},
        year = 2024,
        pages = {3466--3480},
        month = oct,
        publisher = {Association for Computing Machinery},
        doi = {10.1145/3658644.3690304},
        isbn = {9798400706363},
        url = {https://www.merl.com/publications/TR2024-141}
      }

- Wang, Y., Nakai, T., Liu, J., Koike-Akino, T., Oonishi, K., Higashi, T., "MEL-PETs Joint-Context Attack for the NeurIPS 2024 LLM Privacy Challenge Red Team Track", LLM Privacy Challenge at Neural Information Processing Systems (NeurIPS), December 2024 (MERL TR2024-165).

      @inproceedings{Wang2024dec2,
        author = {Wang, Ye and Nakai, Tsunato and Liu, Jing and Koike-Akino, Toshiaki and Oonishi, Kento and Higashi, Takuya},
        title = {{MEL-PETs Joint-Context Attack for the NeurIPS 2024 LLM Privacy Challenge Red Team Track}},
        booktitle = {LLM Privacy Challenge at Neural Information Processing Systems (NeurIPS)},
        year = 2024,
        month = dec,
        url = {https://www.merl.com/publications/TR2024-165}
      }

- Liu, J., Wang, Y., Koike-Akino, T., Nakai, T., Oonishi, K., Higashi, T., "MEL-PETs Defense for the NeurIPS 2024 LLM Privacy Challenge Blue Team Track", LLM Privacy Challenge at Neural Information Processing Systems (NeurIPS) 2024, December 2024 (MERL TR2024-166).

      @inproceedings{Liu2024dec,
        author = {Liu, Jing and Wang, Ye and Koike-Akino, Toshiaki and Nakai, Tsunato and Oonishi, Kento and Higashi, Takuya},
        title = {{MEL-PETs Defense for the NeurIPS 2024 LLM Privacy Challenge Blue Team Track}},
        booktitle = {LLM Privacy Challenge at Neural Information Processing Systems (NeurIPS) 2024},
        year = 2024,
        month = dec,
        url = {https://www.merl.com/publications/TR2024-166}
      }

- Rashid, M.R.U., Liu, J., Koike-Akino, T., Mehnaz, S., Wang, Y., "Forget to Flourish: Leveraging Machine-Unlearning on Pretrained Language Models for Privacy Leakage", Red Teaming GenAI Workshop at Neural Information Processing Systems (NeurIPS), December 2024 (MERL TR2024-168).

      @inproceedings{Rashid2024dec,
        author = {Rashid, Md Rafi Ur and Liu, Jing and Koike-Akino, Toshiaki and Mehnaz, Shagufta and Wang, Ye},
        title = {{Forget to Flourish: Leveraging Machine-Unlearning on Pretrained Language Models for Privacy Leakage}},
        booktitle = {Red Teaming GenAI Workshop at Neural Information Processing Systems (NeurIPS)},
        year = 2024,
        month = dec,
        publisher = {OpenReview},
        url = {https://www.merl.com/publications/TR2024-168}
      }

- Hase, R., Rashid, M.R.U., Lewis, A., Liu, J., Koike-Akino, T., Parsons, K., Wang, Y., "Smoothed Embeddings for Robust Language Models", Safe Generative AI Workshop at Advances in Neural Information Processing Systems (NeurIPS), December 2024 (MERL TR2024-170).

      @inproceedings{Ryo2024dec,
        author = {Hase, Ryo and Rashid, Md Rafi Ur and Lewis, Ashley and Liu, Jing and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Smoothed Embeddings for Robust Language Models}},
        booktitle = {Safe Generative AI Workshop at Advances in Neural Information Processing Systems (NeurIPS)},
        year = 2024,
        month = dec,
        publisher = {OpenReview},
        url = {https://www.merl.com/publications/TR2024-170}
      }

- Rashid, M.R.U., Liu, J., Koike-Akino, T., Wang, Y., Mehnaz, S., "Forget to Flourish: Leveraging Machine-Unlearning on Pretrained Language Models for Privacy Leakage", AAAI Conference on Artificial Intelligence, Toby Walsh, Julie Shah, Zico Kolter, Eds., DOI: 10.1609/aaai.v39i19.34218, February 2025, pp. 20139-20147 (MERL TR2025-017).

      @inproceedings{Rashid2025feb,
        author = {Rashid, Md Rafi Ur and Liu, Jing and Koike-Akino, Toshiaki and Wang, Ye and Mehnaz, Shagufta},
        title = {{Forget to Flourish: Leveraging Machine-Unlearning on Pretrained Language Models for Privacy Leakage}},
        booktitle = {Proceedings of the AAAI Conference on Artificial Intelligence},
        year = 2025,
        editor = {Walsh, Toby and Shah, Julie and Kolter, Zico},
        pages = {20139--20147},
        month = feb,
        publisher = {Association for the Advancement of Artificial Intelligence (AAAI)},
        doi = {10.1609/aaai.v39i19.34218},
        issn = {2374-3468},
        isbn = {978-1-57735-897-8},
        url = {https://www.merl.com/publications/TR2025-017}
      }

- Lewis, A., White, M., Liu, J., Koike-Akino, T., Parsons, K., Wang, Y., "Winning Big with Small Models: Knowledge Distillation vs. Self-Training for Reducing Hallucination in Product QA Agents", ACL 2025 workshop on Generation, Evaluation & Metrics (GEM), Ofir Arviv, Miruna Clinciu, Kaustubh Dhole, Rotem Dror, Sebastian Gehrmann, Eliya Habba, Itay Itzhak, Simon Mille, Yotam Perlitz, Enrico Santus, João Sedoc, Michal Shmueli Scheuer, Gabriel Stanovsky, Oyvind Tafjord, Eds., July 2025, pp. 705-727 (MERL TR2025-114).

      @inproceedings{Lewis2025jul2,
        author = {Lewis, Ashley and White, Michael and Liu, Jing and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Winning Big with Small Models: Knowledge Distillation vs. Self-Training for Reducing Hallucination in Product QA Agents}},
        booktitle = {ACL 2025 workshop on Generation, Evaluation \& Metrics (GEM)},
        year = 2025,
        editor = {Arviv, Ofir and Clinciu, Miruna and Dhole, Kaustubh and Dror, Rotem and Gehrmann, Sebastian and Habba, Eliya and Itzhak, Itay and Mille, Simon and Perlitz, Yotam and Santus, Enrico and Sedoc, João and Shmueli Scheuer, Michal and Stanovsky, Gabriel and Tafjord, Oyvind},
        pages = {705--727},
        month = jul,
        publisher = {Association for Computational Linguistics},
        isbn = {979-8-89176-261-9},
        url = {https://www.merl.com/publications/TR2025-114}
      }