- Date & Time: Wednesday, February 26, 2025; 11:00 AM

Speaker: Petar Veličković, Google DeepMind

MERL Host: Anoop Cherian

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract  Recent years have seen a significant surge in complex AI systems for competitive programming, capable of performing at admirable levels against human competitors. While steady progress has been made, the highest percentiles still remain out of reach for these methods on standard competition platforms such as Codeforces. In this talk, I will describe and dive into our recent work, where we focussed on combinatorial competitive programming. In combinatorial challenges, the target is to find as-good-as-possible solutions to otherwise computationally intractable problems, over specific given inputs. We hypothesise that this scenario offers a unique testbed for human-AI synergy, as human programmers can write a backbone of a heuristic solution, after which AI can be used to optimise the scoring function used by the heuristic. We deploy our approach on previous iterations of Hash Code, a global team programming competition inspired by NP-hard software engineering problems at Google, and we leverage FunSearch to evolve our scoring functions. Our evolved solutions significantly improve the attained scores from their baseline, successfully breaking into the top percentile on all previous Hash Code online qualification rounds, and outperforming the top human teams on several. To the best of our knowledge, this is the first known AI-assisted top-tier result in competitive programming.


-

- Date & Time: Wednesday, January 29, 2025; 1:00 PM

Speaker: David Lindell, University of Toronto

MERL Host: Joshua Rapp

Research Areas: Computational Sensing, Computer Vision, Signal Processing

Abstract  The observed timescales of the universe span from the exasecond scale (~1e18 seconds) down to the zeptosecond scale (~1e-21 seconds). While specialized imaging systems can capture narrow slices of this temporal spectrum in the ultra-fast regime (e.g., nanoseconds to picoseconds; 1e-9 to 1e-12 s), they cannot simultaneously capture both slow (> 1 second) and ultra-fast events (< 1 nanosecond). Further, ultra-fast imaging systems are conventionally limited to single-viewpoint capture, hindering 3D visualization at ultra-fast timescales. In this talk, I discuss (1) new computational algorithms that turn a single-photon detector into an "ultra-wideband" imaging system that captures events from seconds to picoseconds; and (2) a method for neural rendering using multi-viewpoint, ultra-fast videos captured using single-photon detectors. The latter approach enables rendering videos of propagating light from novel viewpoints, observation of viewpoint-dependent changes in light transport predicted by Einstein, recovery of material properties, and accurate 3D reconstruction from multiply scattered light. Finally, I discuss future directions in ultra-wideband imaging.


-

- Date & Time: Wednesday, October 2, 2024; 1:00 PM

Speaker: Zhaojian Li, Michigan State University

MERL Host: Yebin Wang

Research Areas: Artificial Intelligence, Computer Vision, Control, Robotics

Abstract  Harvesting labor is the single largest cost in apple production in the U.S. Surging costs and a growing shortage of labor have forced the apple industry to seek automated harvesting solutions. Despite considerable progress in recent years, existing robotic harvesting systems still fall short of performance expectations, lacking robustness and proving inefficient or overly complex for practical commercial deployment. In this talk, I will present the development and evaluation of a new dual-arm robotic apple harvesting system. This work is the result of a continuing collaboration between Michigan State University and the U.S. Department of Agriculture.


-

- Date & Time: Tuesday, February 13, 2024; 1:00 PM

Speaker: Melanie Mitchell, Santa Fe Institute

MERL Host: Suhas Anand Lohit

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Human-Computer Interaction

Abstract  I will survey a current, heated debate in the AI research community on whether large pre-trained language models can be said to "understand" language -- and the physical and social situations language encodes -- in any important sense. I will describe arguments that have been made for and against such understanding, and, more generally, will discuss what methods can be used to fairly evaluate understanding and intelligence in AI systems. I will conclude with key questions for the broader sciences of intelligence that have arisen in light of these discussions.


-

- Date & Time: Tuesday, November 28, 2023; 12:00 PM

Speaker: Kristina Monakhova, MIT and Cornell

MERL Host: Joshua Rapp

Research Areas: Computational Sensing, Computer Vision, Machine Learning, Signal Processing

Abstract  Imaging in low light settings is extremely challenging due to low photon counts, both in photography and in microscopy. In photography, imaging under low light, high gain settings often results in highly structured, non-Gaussian sensor noise that’s hard to characterize or denoise. In this talk, we address this by developing a GAN-tuned physics-based noise model to more accurately represent camera noise at the lowest light, and highest gain settings. Using this noise model, we train a video denoiser using synthetic data and demonstrate photorealistic videography at starlight (submillilux levels of illumination) for the first time.


For multiphoton microscopy, which is a form of scanning microscopy, there’s a trade-off between field of view, phototoxicity, acquisition time, and image quality, often resulting in noisy measurements. While deep learning-based methods have shown compelling denoising performance, can we trust these methods enough for critical scientific and medical applications? In the second part of this talk, I’ll introduce a learned, distribution-free uncertainty quantification technique that can both denoise and predict pixel-wise uncertainty to gauge how much we can trust our denoiser’s performance. Furthermore, we propose to leverage this learned, pixel-wise uncertainty to drive an adaptive acquisition technique that rescans only the most uncertain regions of a sample. With our sample- and algorithm-informed adaptive acquisition, we demonstrate a 120X improvement in total scanning time and total light dose for multiphoton microscopy, while successfully recovering fine structures within the sample.

-

- Date & Time: Tuesday, October 31, 2023; 2:00 PM

Speaker: Tanmay Gupta, Allen Institute for Artificial Intelligence

MERL Host: Moitreya Chatterjee

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract  Building General Purpose Vision Systems (GPVs) that can perform a huge variety of tasks has been a long-standing goal for the computer vision community. However, end-to-end training of these systems to handle different modalities and tasks has proven to be extremely challenging. In this talk, I will describe a lucrative neuro-symbolic alternative to the common end-to-end learning paradigm called Visual Programming. Visual Programming is a general framework that leverages the code-generation abilities of LLMs, existing neural models, and non-differentiable programs to enable powerful applications. Some of these applications continue to remain elusive for the current generation of end-to-end trained GPVs.


-

- Date & Time: Tuesday, March 14, 2023; 1:00 PM

Speaker: Suraj Srinivas, Harvard University

MERL Host: Suhas Anand Lohit

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract  In this talk, I will discuss our recent research on understanding post-hoc interpretability. I will begin by introducing a characterization of post-hoc interpretability methods as local function approximators, and the implications of this viewpoint, including a no-free-lunch theorem for explanations. Next, we shall challenge the assumption that post-hoc explanations provide information about a model's discriminative capabilities p(y|x) and demonstrate that many common methods instead rely on a conditional generative model p(x|y). This observation underscores the importance of being cautious when using such methods in practice. Finally, I will propose to resolve this via regularization of model structure, specifically by training low-curvature neural networks, resulting in improved model robustness and stable gradients.


-

- Date & Time: Monday, December 12, 2022; 1:00pm-5:30pm ET

Location: Mitsubishi Electric Research Laboratories (MERL)/Virtual

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video

Brief - Join MERL's virtual open house on December 12th, 2022! Featuring a keynote, live sessions, research area booths, and opportunities to interact with our research team. Discover who we are and what we do, and learn about internship and employment opportunities.

-

- Date & Time: Tuesday, November 1, 2022; 1:00 PM

Speaker: Jiajun Wu, Stanford University

MERL Host: Anoop Cherian

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract  The visual world has its inherent structure: scenes are made of multiple identical objects; different objects may have the same color or material, with a regular layout; each object can be symmetric and have repetitive parts. How can we infer, represent, and use such structure from raw data, without hampering the expressiveness of neural networks? In this talk, I will demonstrate that such structure, or code, can be learned from natural supervision. Here, natural supervision can be from pixels, where neuro-symbolic methods automatically discover repetitive parts and objects for scene synthesis. It can also be from objects, where humans during fabrication introduce priors that can be leveraged by machines to infer regular intrinsics such as texture and material. When solving these problems, structured representations and neural nets play complementary roles: it is more data-efficient to learn with structured representations, and they generalize better to new scenarios with robustly captured high-level information; neural nets effectively extract complex, low-level features from cluttered and noisy visual data.


-

- Date: Thursday, October 6, 2022

Location: Kendall Square, Cambridge, MA

MERL Contacts: Anoop Cherian; Jonathan Le Roux

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Brief - SANE 2022, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Thursday October 6, 2022 in Kendall Square, Cambridge, MA.

It was the 9th edition in the SANE series of workshops, which started in 2012 and was held every year alternately in Boston and New York until 2019. Since the first edition, the audience has grown to a record 200 participants and 45 posters in 2019. After a 2-year hiatus due to the pandemic, SANE returned with an in-person gathering of 140 students and researchers.

SANE 2022 featured invited talks by seven leading researchers from the Northeast: Rupal Patel (Northeastern/VocaliD), Wei-Ning Hsu (Meta FAIR), Scott Wisdom (Google), Tara Sainath (Google), Shinji Watanabe (CMU), Anoop Cherian (MERL), and Chuang Gan (UMass Amherst/MIT-IBM Watson AI Lab). It also featured a lively poster session with 29 posters.

SANE 2022 was co-organized by Jonathan Le Roux (MERL), Arnab Ghoshal (Apple), John Hershey (Google), and Shinji Watanabe (CMU). SANE remained a free event thanks to generous sponsorship by Bose, Google, MERL, and Microsoft.

Slides and videos of the talks will be released on the SANE workshop website.

-

- Date & Time: Tuesday, September 6, 2022; 12:00 PM EDT

Speaker: Chuang Gan, UMass Amherst & MIT-IBM Watson AI Lab

MERL Host: Jonathan Le Roux

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Abstract  Human sensory perception of the physical world is rich and multimodal and can flexibly integrate input from all five sensory modalities -- vision, touch, smell, hearing, and taste. However, in AI, attention has primarily focused on visual perception. In this talk, I will introduce my efforts in connecting vision with sound, which will allow machine perception systems to see objects and infer physics from multi-sensory data. In the first part of my talk, I will introduce a self-supervised approach that could learn to parse images and separate the sound sources by watching and listening to unlabeled videos without requiring additional manual supervision. In the second part of my talk, I will show how we may further infer the underlying causal structure in 3D environments through visual and auditory observations. This enables agents to seek the source of a repeating environmental sound (e.g., an alarm) or identify what object has fallen, and where, from an intermittent impact sound.


-

- Date & Time: Wednesday, March 30, 2022; 11:00 AM EDT

Speaker: Vincent Sitzmann, MIT

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract  Given only a single picture, people are capable of inferring a mental representation that encodes rich information about the underlying 3D scene. We acquire this skill not through massive labeled datasets of 3D scenes, but through self-supervised observation and interaction. Building machines that can infer similarly rich neural scene representations is critical if they are to one day parallel people’s ability to understand, navigate, and interact with their surroundings. This poses a unique set of challenges that sets neural scene representations apart from conventional representations of 3D scenes: Rendering and processing operations need to be differentiable, and the type of information they encode is unknown a priori, requiring them to be extraordinarily flexible. At the same time, training them without ground-truth 3D supervision is an underdetermined problem, highlighting the need for structure and inductive biases without which models converge to spurious explanations.


I will demonstrate how we can equip neural networks with inductive biases that enable them to learn 3D geometry, appearance, and even semantic information, self-supervised only from posed images. I will show how this approach unlocks the learning of priors, enabling 3D reconstruction from only a single posed 2D image, and how we may extend these representations to other modalities such as sound. I will then discuss recent work on learning the neural rendering operator to make rendering and training fast, and how this speed-up enables us to learn object-centric neural scene representations, learning to decompose 3D scenes into objects, given only images. Finally, I will talk about a recent application of self-supervised scene representation learning in robotic manipulation, where it enables us to learn to manipulate classes of objects in unseen poses from only a handful of human demonstrations.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm - 5:30pm EST

Location: Virtual Event

Speaker: Prof. Melanie Zeilinger, ETH

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - MERL is excited to announce the second keynote speaker for our Virtual Open House 2021:

Prof. Melanie Zeilinger from ETH.

Our virtual open house will take place on December 9, 2021, 1:00pm - 5:30pm (EST).

Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities. Prof. Zeilinger's talk is scheduled for 3:15pm - 3:45pm (EST).

Registration: https://mailchi.mp/merl/merlvoh2021

Keynote Title: Control Meets Learning - On Performance, Safety and User Interaction

Abstract: With increasing sensing and communication capabilities, physical systems today are becoming one of the largest generators of data, making learning a central component of autonomous control systems. While this paradigm shift offers tremendous opportunities to address new levels of system complexity, variability and user interaction, it also raises fundamental questions of learning in a closed-loop dynamical control system. In this talk, I will present some of our recent results showing how even safety-critical systems can leverage the potential of data. I will first briefly present concepts for using learning for automatic controller design and for a new safety framework that can equip any learning-based controller with safety guarantees. The second part will then discuss how expert and user information can be utilized to optimize system performance, where I will particularly highlight an approach developed together with MERL for personalizing the motion planning in autonomous driving to the individual driving style of a passenger.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm - 5:30pm EST

Location: Virtual Event

Speaker: Prof. Ashok Veeraraghavan, Rice University

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - MERL is excited to announce the first keynote speaker for our Virtual Open House 2021:

Prof. Ashok Veeraraghavan from Rice University.

Our virtual open house will take place on December 9, 2021, 1:00pm - 5:30pm (EST).

Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities. Prof. Veeraraghavan's talk is scheduled for 1:15pm - 1:45pm (EST).

Registration: https://mailchi.mp/merl/merlvoh2021

Keynote Title: Computational Imaging: Beyond the limits imposed by lenses.

Abstract: The lens has long been a central element of cameras, since its early use in the mid-nineteenth century by Niepce, Talbot, and Daguerre. The role of the lens, from the Daguerreotype to modern digital cameras, is to refract light to achieve a one-to-one mapping between a point in the scene and a point on the sensor. This effect enables the sensor to compute a particular two-dimensional (2D) integral of the incident 4D light-field. We propose a radical departure from this practice and the many limitations it imposes. In the talk we focus on two inter-related research projects that attempt to go beyond lens-based imaging.

First, we discuss our lab’s recent efforts to build flat, extremely thin imaging devices by replacing the lens in a conventional camera with an amplitude mask and computational reconstruction algorithms. These lensless cameras, called FlatCams, can be less than a millimeter in thickness and enable applications where size, weight, thickness or cost are the driving factors. Second, we discuss high-resolution, long-distance imaging using Fourier Ptychography, where the need for a large-aperture, aberration-corrected lens is replaced by a camera array and associated phase retrieval algorithms, resulting again in order-of-magnitude reductions in size, weight and cost. Finally, I will spend a few minutes discussing how the wholistic computational imaging approach can be used to create ultra-high-resolution wavefront sensors.

-

- Date & Time: Thursday, December 9, 2021; 1:00pm-5:30pm (EST)

Location: Virtual Event

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio, Digital Video, Human-Computer Interaction, Information Security

Brief - Mitsubishi Electric Research Laboratories cordially invites you to join our Virtual Open House, on December 9, 2021, 1:00pm - 5:30pm (EST).

The event will feature keynotes, live sessions, research area booths, and time for open interactions with our researchers. Join us to learn more about who we are, what we do, and discuss our internship and employment opportunities.

Registration: https://mailchi.mp/merl/merlvoh2021

-

- Date & Time: Tuesday, November 2, 2021; 1:00 PM EST

Speaker: Dr. Hsiao-Yu (Fish) Tung, MIT BCS

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Robotics

Abstract  Current state-of-the-art CNNs can localize and name objects in internet photos, yet they lack the basic knowledge that a two-year-old toddler possesses: objects persist over time despite changes in the observer’s viewpoint or during cross-object occlusions; objects have 3D extent; solid objects do not pass through each other. In this talk, I will introduce neural architectures that learn to parse video streams of a static scene into world-centric 3D feature maps by disentangling camera motion from scene appearance. I will show that the proposed architectures learn object permanence, can imagine RGB views from novel viewpoints in truly novel scenes, can conduct basic spatial reasoning and planning, can infer affordability in sentences, and can learn geometry-aware 3D concepts that allow pose-aware object recognition to happen with weak/sparse labels. Our experiments suggest that the proposed architectures are essential for the models to generalize across objects and locations, and that they overcome many limitations of 2D CNNs. I will show how we can use the proposed 3D representations to build machine perception and physical understanding closer to that of humans.


-

- Date & Time: Tuesday, September 28, 2021; 1:00 PM EST

Speaker: Dr. Ruohan Gao, Stanford University

MERL Host: Gordon Wichern

Research Areas: Computer Vision, Machine Learning, Speech & Audio

Abstract  While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both a semantic and a spatial signal. At the end, I will also briefly talk about our latest work on multisensory learning for robotics.

-

- Date & Time: Wednesday, December 9, 2020; 1:00-5:00PM EST

Location: Virtual

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

-

- Date: Wednesday, September 26, 2018 - Friday, September 28, 2018

Location: Houston, Texas

MERL Contacts: Chiori Hori; Elizabeth Phillips

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Brief - MERL, in partnership with Mitsubishi Electric, was a Gold Sponsor of the Grace Hopper Celebration 2018 (GHC18), held in Houston, TX on September 26-28. Presented by AnitaB.org and the Association for Computing Machinery, this is the world's largest gathering of women technologists. Chiori Hori and Elizabeth Phillips from MERL, and Yoshiyuki Umei, Jared Baker and Lien Randle from MEUS, proudly represented Mitsubishi Electric at the recruiting expo, which drew over 20,000 female technologists this year.

-

- Date & Time: Thursday, November 29, 2018; 4-6pm

Location: 201 Broadway, 8th floor, Cambridge, MA

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

Brief - Snacks, demos, science: On Thursday 11/29, Mitsubishi Electric Research Labs (MERL) will host an open house for graduate+ students interested in internships, post-docs, and research scientist positions. The event will be held from 4-6pm and will feature demos & short presentations in our main areas of research including artificial intelligence, robotics, computer vision, speech processing, optimization, machine learning, data analytics, signal processing, communications, sensing, control and dynamical systems, as well as multi-physical modeling and electronic devices. MERL is a high-impact, publication-oriented research lab with very extensive internship and university collaboration programs. Most internships lead to publication; many of our interns and staff have gone on to notable careers at MERL and in academia. Come mix with our researchers, see our state-of-the-art technologies, and learn about our research opportunities. Dress code: casual, with resumes.

Pre-registration for the event is strongly encouraged:

merlopenhouse.eventbrite.com

Current internship and employment openings:

www.merl.com/internship/openings

www.merl.com/employment/employment

Information about working at MERL:

www.merl.com/employment.

-

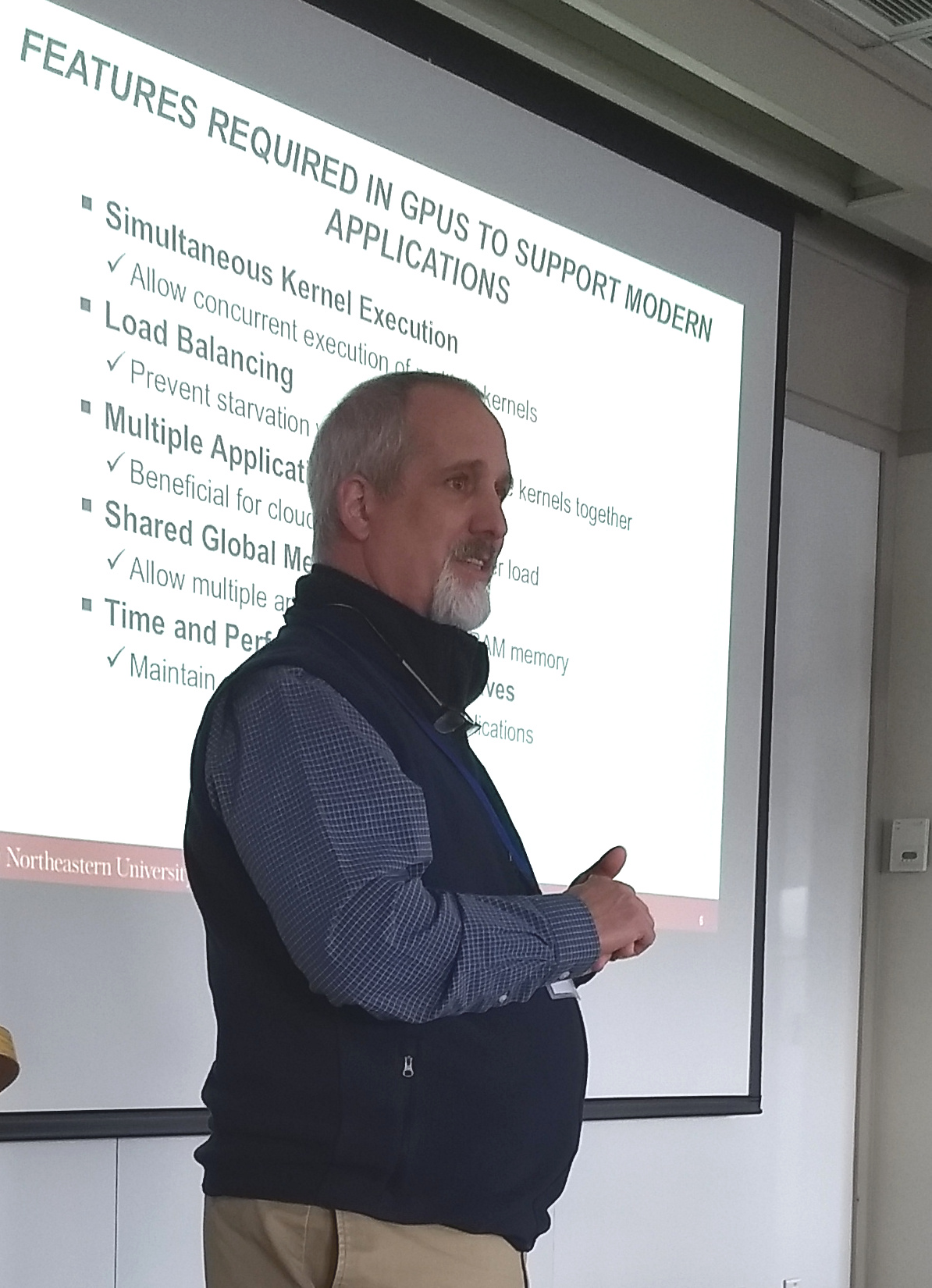

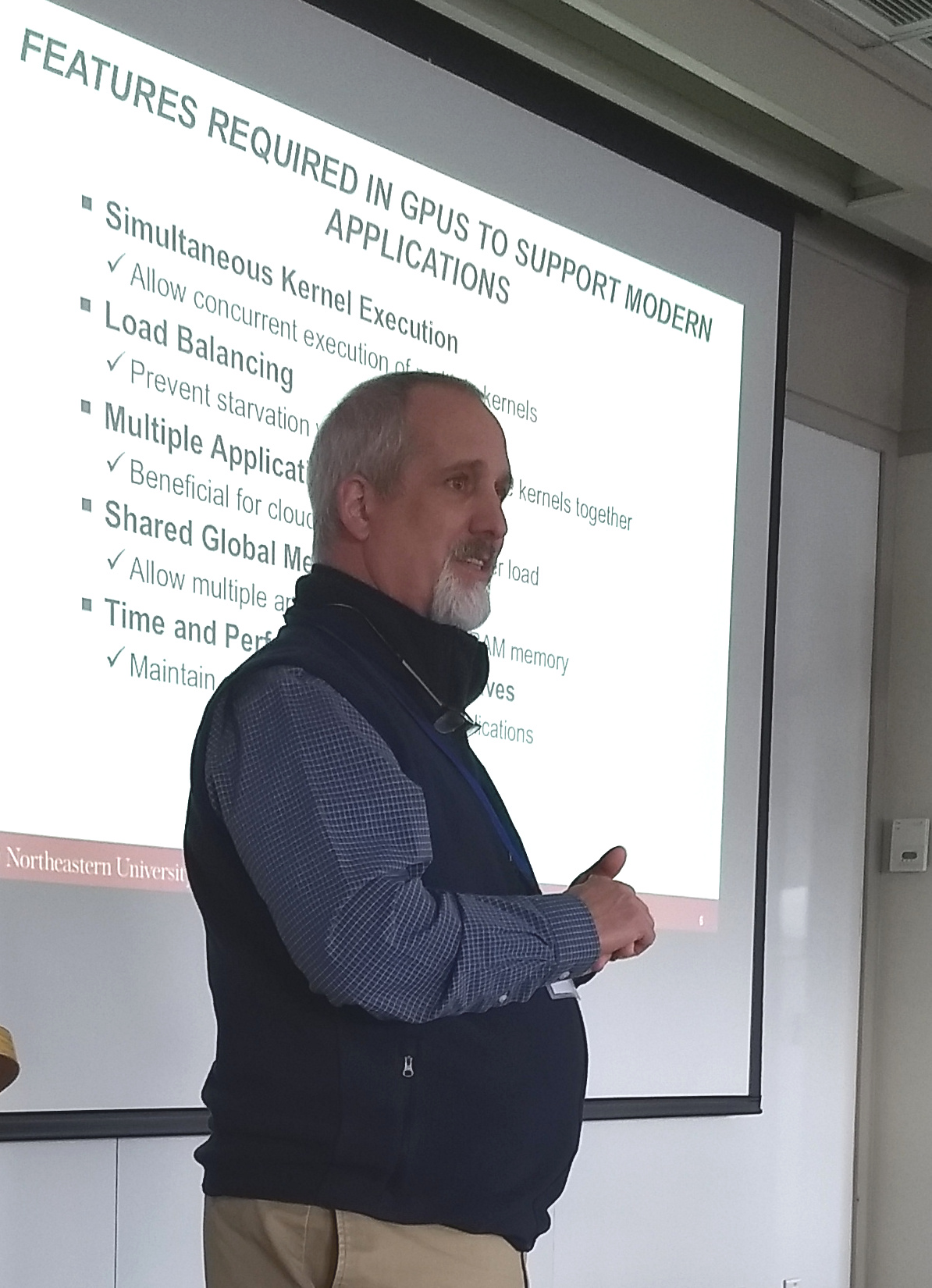

- Date & Time: Friday, February 2, 2018; 12:00

Speaker: Dr. David Kaeli, Northeastern University

MERL Host: Abraham Goldsmith

Research Areas: Control, Optimization, Machine Learning, Speech & Audio

Abstract  GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. Still, challenges remain in using a GPU to target more general-purpose applications. GPUs achieve impressive speedups when compared to CPUs, since GPUs have a large number of compute cores and high memory bandwidth. Recent GPU performance is approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends with GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous Systems Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.

-

- Date & Time: Thursday, November 30, 2017; 4-6pm

Location: 201 Broadway, 8th floor, Cambridge, MA

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Brief - Snacks, demos, science: On Thursday 11/30, Mitsubishi Electric Research Labs (MERL) will host an open house for graduate+ students interested in internships, post-docs, and research scientist positions. The event will be held from 4-6pm and will feature demos & short presentations in our main areas of research: algorithms, multimedia, electronics, communications, computer vision, speech processing, optimization, machine learning, data analytics, mechatronics, dynamics, control, and robotics. MERL is a high impact publication-oriented research lab with very extensive internship and university collaboration programs. Most internships lead to publication; many of our interns and staff have gone on to notable careers at MERL and in academia. Come mix with our researchers, see our state of the art technologies, and learn about our research opportunities. Dress code: casual, with resumes.

Pre-registration for the event is strongly encouraged:

https://merlopenhouse2.eventbrite.com/

Current internship and employment openings:

http://www.merl.com/internship/openings

http://www.merl.com/employment/employment

-

- Date: Thursday, June 1, 2017

Location: IEEE Conference on Automatic Face and Gesture Recognition (FG 2017), Washington, DC

Speaker: Tim K. Marks

MERL Contact: Tim K. Marks

Research Area: Machine Learning

Brief - MERL Senior Principal Research Scientist Tim K. Marks will give the invited lunch talk on Thursday, June 1, at the IEEE International Conference on Automatic Face and Gesture Recognition (FG 2017). The talk is entitled "Robust Real-Time 3D Head Pose and 2D Face Alignment."

-

- Date & Time: Monday, July 10, 2017; 6:15 PM - 7:15 PM

Location: David Lawrence Convention Center, Pittsburgh PA

Speaker: Andrew Knyazev and other panelists, MERL

Brief - Andrew Knyazev accepted an invitation to represent MERL at the panel on Student Careers in Business, Industry and Government at the annual meeting of the Society for Industrial and Applied Mathematics (SIAM).

The format consists of a five minute introduction by each of the panelists covering their background and an overview of the mathematical and computational challenges at their organization. The introductions will be followed by questions from the students.

-

- Date & Time: Tuesday, March 28, 2017; 1:30 - 5:30PM

Location: Google (355 Main St., 5th Floor, Cambridge MA)

MERL Contacts: Daniel N. Nikovski; Anthony Vetro; Richard C. (Dick) Waters; Jinyun Zhang

Brief - How will AI and robotics reshape the economy and create new opportunities (and challenges) across industries? Who are the hottest companies that will compete with the likes of Google, Amazon, and Uber to create the future? And what are New England innovators doing to strengthen the local cluster and help lead the national discussion?

MERL will be participating in Xconomy's third annual conference on AI and robotics in Boston to address these questions. MERL President & CEO, Dick Waters, will be on a panel discussing the status and future of self-driving vehicles. Lab members will also be on hand to demonstrate and discuss recent advances in AI and robotics technology.

The agenda and registration for the event can be found online: https://xconomyforum85.eventbrite.com.

-

Recent years have seen a significant surge in complex AI systems for competitive programming, capable of performing at admirable levels against human competitors. While steady progress has been made, the highest percentiles still remain out of reach for these methods on standard competition platforms such as Codeforces. In this talk, I will describe and dive into our recent work, where we focussed on combinatorial competitive programming. In combinatorial challenges, the target is to find as-good-as-possible solutions to otherwise computationally intractable problems, over specific given inputs. We hypothesise that this scenario offers a unique testbed for human-AI synergy, as human programmers can write a backbone of a heuristic solution, after which AI can be used to optimise the scoring function used by the heuristic. We deploy our approach on previous iterations of Hash Code, a global team programming competition inspired by NP-hard software engineering problems at Google, and we leverage FunSearch to evolve our scoring functions. Our evolved solutions significantly improve the attained scores from their baseline, successfully breaking into the top percentile on all previous Hash Code online qualification rounds, and outperforming the top human teams on several. To the best of our knowledge, this is the first known AI-assisted top-tier result in competitive programming.

Recent years have seen a significant surge in complex AI systems for competitive programming, capable of performing at admirable levels against human competitors. While steady progress has been made, the highest percentiles still remain out of reach for these methods on standard competition platforms such as Codeforces. In this talk, I will describe and dive into our recent work, where we focussed on combinatorial competitive programming. In combinatorial challenges, the target is to find as-good-as-possible solutions to otherwise computationally intractable problems, over specific given inputs. We hypothesise that this scenario offers a unique testbed for human-AI synergy, as human programmers can write a backbone of a heuristic solution, after which AI can be used to optimise the scoring function used by the heuristic. We deploy our approach on previous iterations of Hash Code, a global team programming competition inspired by NP-hard software engineering problems at Google, and we leverage FunSearch to evolve our scoring functions. Our evolved solutions significantly improve the attained scores from their baseline, successfully breaking into the top percentile on all previous Hash Code online qualification rounds, and outperforming the top human teams on several. To the best of our knowledge, this is the first known AI-assisted top-tier result in competitive programming. The observed timescales of the universe span from the exasecond scale (~1e18 seconds) down to the zeptosecond scale (~1e-21 seconds). While specialized imaging systems can capture narrow slices of this temporal spectrum in the ultra-fast regime (e.g., nanoseconds to picoseconds; 1e-9 to 1e-12 s), they cannot simultaneously capture both slow (> 1 second) and ultra-fast events (< 1 nanosecond). Further, ultra-fast imaging systems are conventionally limited to single-viewpoint capture, hindering 3D visualization at ultra-fast timescales. 
In this talk, I discuss (1) new computational algorithms that turn a single-photon detector into an "ultra-wideband" imaging system that captures events from seconds to picoseconds; and (2) a method for neural rendering using multi-viewpoint, ultra-fast videos captured using single-photon detectors. The latter approach enables rendering videos of propagating light from novel viewpoints, observation of viewpoint-dependent changes in light transport predicted by Einstein, recovery of material properties, and accurate 3D reconstruction from multiply scattered light. Finally, I discuss future directions in ultra-wideband imaging.

The observed timescales of the universe span from the exasecond scale (~1e18 seconds) down to the zeptosecond scale (~1e-21 seconds). While specialized imaging systems can capture narrow slices of this temporal spectrum in the ultra-fast regime (e.g., nanoseconds to picoseconds; 1e-9 to 1e-12 s), they cannot simultaneously capture both slow (> 1 second) and ultra-fast events (< 1 nanosecond). Further, ultra-fast imaging systems are conventionally limited to single-viewpoint capture, hindering 3D visualization at ultra-fast timescales. In this talk, I discuss (1) new computational algorithms that turn a single-photon detector into an "ultra-wideband" imaging system that captures events from seconds to picoseconds; and (2) a method for neural rendering using multi-viewpoint, ultra-fast videos captured using single-photon detectors. The latter approach enables rendering videos of propagating light from novel viewpoints, observation of viewpoint-dependent changes in light transport predicted by Einstein, recovery of material properties, and accurate 3D reconstruction from multiply scattered light. Finally, I discuss future directions in ultra-wideband imaging. Harvesting labor is the single largest cost in apple production in the U.S. Surging cost and growing shortage of labor has forced the apple industry to seek automated harvesting solutions. Despite considerable progress in recent years, the existing robotic harvesting systems still fall short of performance expectations, lacking robustness and proving inefficient or overly complex for practical commercial deployment. In this talk, I will present the development and evaluation of a new dual-arm robotic apple harvesting system. This work is a result of a continuous collaboration between Michigan State University and U.S. Department of Agriculture.

Harvesting labor is the single largest cost in apple production in the U.S. Surging cost and growing shortage of labor has forced the apple industry to seek automated harvesting solutions. Despite considerable progress in recent years, the existing robotic harvesting systems still fall short of performance expectations, lacking robustness and proving inefficient or overly complex for practical commercial deployment. In this talk, I will present the development and evaluation of a new dual-arm robotic apple harvesting system. This work is a result of a continuous collaboration between Michigan State University and U.S. Department of Agriculture. I will survey a current, heated debate in the AI research community on whether large pre-trained language models can be said to "understand" language -- and the physical and social situations language encodes -- in any important sense. I will describe arguments that have been made for and against such understanding, and, more generally, will discuss what methods can be used to fairly evaluate understanding and intelligence in AI systems. I will conclude with key questions for the broader sciences of intelligence that have arisen in light of these discussions.

I will survey a current, heated debate in the AI research community on whether large pre-trained language models can be said to "understand" language -- and the physical and social situations language encodes -- in any important sense. I will describe arguments that have been made for and against such understanding, and, more generally, will discuss what methods can be used to fairly evaluate understanding and intelligence in AI systems. I will conclude with key questions for the broader sciences of intelligence that have arisen in light of these discussions. Imaging in low light settings is extremely challenging due to low photon counts, both in photography and in microscopy. In photography, imaging under low light, high gain settings often results in highly structured, non-Gaussian sensor noise that’s hard to characterize or denoise. In this talk, we address this by developing a GAN-tuned physics-based noise model to more accurately represent camera noise at the lowest light, and highest gain settings. Using this noise model, we train a video denoiser using synthetic data and demonstrate photorealistic videography at starlight (submillilux levels of illumination) for the first time.

Imaging in low light settings is extremely challenging due to low photon counts, both in photography and in microscopy. In photography, imaging under low light, high gain settings often results in highly structured, non-Gaussian sensor noise that’s hard to characterize or denoise. In this talk, we address this by developing a GAN-tuned physics-based noise model to more accurately represent camera noise at the lowest light, and highest gain settings. Using this noise model, we train a video denoiser using synthetic data and demonstrate photorealistic videography at starlight (submillilux levels of illumination) for the first time.  Building General Purpose Vision Systems (GPVs) that can perform a huge variety of tasks has been a long-standing goal for the computer vision community. However, end-to-end training of these systems to handle different modalities and tasks has proven to be extremely challenging. In this talk, I will describe a lucrative neuro-symbolic alternative to the common end-to-end learning paradigm called Visual Programming. Visual Programming is a general framework that leverages the code-generation abilities of LLMs, existing neural models, and non-differentiable programs to enable powerful applications. Some of these applications continue to remain elusive for the current generation of end-to-end trained GPVs.

Building General Purpose Vision Systems (GPVs) that can perform a huge variety of tasks has been a long-standing goal for the computer vision community. However, end-to-end training of these systems to handle different modalities and tasks has proven to be extremely challenging. In this talk, I will describe a lucrative neuro-symbolic alternative to the common end-to-end learning paradigm called Visual Programming. Visual Programming is a general framework that leverages the code-generation abilities of LLMs, existing neural models, and non-differentiable programs to enable powerful applications. Some of these applications continue to remain elusive for the current generation of end-to-end trained GPVs. In this talk, I will discuss our recent research on understanding post-hoc interpretability. I will begin by introducing a characterization of post-hoc interpretability methods as local function approximators, and the implications of this viewpoint, including a no-free-lunch theorem for explanations. Next, we shall challenge the assumption that post-hoc explanations provide information about a model's discriminative capabilities p(y|x) and instead demonstrate that many common methods instead rely on a conditional generative model p(x|y). This observation underscores the importance of being cautious when using such methods in practice. Finally, I will propose to resolve this via regularization of model structure, specifically by training low curvature neural networks, resulting in improved model robustness and stable gradients.

In this talk, I will discuss our recent research on understanding post-hoc interpretability. I will begin by introducing a characterization of post-hoc interpretability methods as local function approximators, and the implications of this viewpoint, including a no-free-lunch theorem for explanations. Next, we shall challenge the assumption that post-hoc explanations provide information about a model's discriminative capabilities p(y|x) and instead demonstrate that many common methods instead rely on a conditional generative model p(x|y). This observation underscores the importance of being cautious when using such methods in practice. Finally, I will propose to resolve this via regularization of model structure, specifically by training low curvature neural networks, resulting in improved model robustness and stable gradients. The visual world has its inherent structure: scenes are made of multiple identical objects; different objects may have the same color or material, with a regular layout; each object can be symmetric and have repetitive parts. How can we infer, represent, and use such structure from raw data, without hampering the expressiveness of neural networks? In this talk, I will demonstrate that such structure, or code, can be learned from natural supervision. Here, natural supervision can be from pixels, where neuro-symbolic methods automatically discover repetitive parts and objects for scene synthesis. It can also be from objects, where humans during fabrication introduce priors that can be leveraged by machines to infer regular intrinsics such as texture and material. When solving these problems, structured representations and neural nets play complementary roles: it is more data-efficient to learn with structured representations, and they generalize better to new scenarios with robustly captured high-level information; neural nets effectively extract complex, low-level features from cluttered and noisy visual data.

The visual world has its inherent structure: scenes are made of multiple identical objects; different objects may have the same color or material, with a regular layout; each object can be symmetric and have repetitive parts. How can we infer, represent, and use such structure from raw data, without hampering the expressiveness of neural networks? In this talk, I will demonstrate that such structure, or code, can be learned from natural supervision. Here, natural supervision can be from pixels, where neuro-symbolic methods automatically discover repetitive parts and objects for scene synthesis. It can also be from objects, where humans during fabrication introduce priors that can be leveraged by machines to infer regular intrinsics such as texture and material. When solving these problems, structured representations and neural nets play complementary roles: it is more data-efficient to learn with structured representations, and they generalize better to new scenarios with robustly captured high-level information; neural nets effectively extract complex, low-level features from cluttered and noisy visual data. Human sensory perception of the physical world is rich and multimodal and can flexibly integrate input from all five sensory modalities -- vision, touch, smell, hearing, and taste. However, in AI, attention has primarily focused on visual perception. In this talk, I will introduce my efforts in connecting vision with sound, which will allow machine perception systems to see objects and infer physics from multi-sensory data. In the first part of my talk, I will introduce a. self-supervised approach that could learn to parse images and separate the sound sources by watching and listening to unlabeled videos without requiring additional manual supervision. In the second part of my talk, I will show we may further infer the underlying causal structure in 3D environments through visual and auditory observations. 
This enables agents to seek the sound source of repeating environmental sound (e.g., alarm) or identify what object has fallen, and where, from an intermittent impact sound.

Human sensory perception of the physical world is rich and multimodal and can flexibly integrate input from all five sensory modalities -- vision, touch, smell, hearing, and taste. However, in AI, attention has primarily focused on visual perception. In this talk, I will introduce my efforts in connecting vision with sound, which will allow machine perception systems to see objects and infer physics from multi-sensory data. In the first part of my talk, I will introduce a. self-supervised approach that could learn to parse images and separate the sound sources by watching and listening to unlabeled videos without requiring additional manual supervision. In the second part of my talk, I will show we may further infer the underlying causal structure in 3D environments through visual and auditory observations. This enables agents to seek the sound source of repeating environmental sound (e.g., alarm) or identify what object has fallen, and where, from an intermittent impact sound. Given only a single picture, people are capable of inferring a mental representation that encodes rich information about the underlying 3D scene. We acquire this skill not through massive labeled datasets of 3D scenes, but through self-supervised observation and interaction. Building machines that can infer similarly rich neural scene representations is critical if they are to one day parallel people’s ability to understand, navigate, and interact with their surroundings. This poses a unique set of challenges that sets neural scene representations apart from conventional representations of 3D scenes: Rendering and processing operations need to be differentiable, and the type of information they encode is unknown a priori, requiring them to be extraordinarily flexible. At the same time, training them without ground-truth 3D supervision is an underdetermined problem, highlighting the need for structure and inductive biases without which models converge to spurious explanations.

Given only a single picture, people are capable of inferring a mental representation that encodes rich information about the underlying 3D scene. We acquire this skill not through massive labeled datasets of 3D scenes, but through self-supervised observation and interaction. Building machines that can infer similarly rich neural scene representations is critical if they are to one day parallel people’s ability to understand, navigate, and interact with their surroundings. This poses a unique set of challenges that sets neural scene representations apart from conventional representations of 3D scenes: Rendering and processing operations need to be differentiable, and the type of information they encode is unknown a priori, requiring them to be extraordinarily flexible. At the same time, training them without ground-truth 3D supervision is an underdetermined problem, highlighting the need for structure and inductive biases without which models converge to spurious explanations. Current state-of-the-art CNNs can localize and name objects in internet photos, yet, they miss the basic knowledge that a two-year-old toddler has possessed: objects persist over time despite changes in the observer’s viewpoint or during cross-object occlusions; objects have 3D extent; solid objects do not pass through each other. In this talk, I will introduce neural architectures that learn to parse video streams of a static scene into world-centric 3D feature maps by disentangling camera motion from scene appearance. I will show the proposed architectures learn object permanence, can imagine RGB views from novel viewpoints in truly novel scenes, can conduct basic spatial reasoning and planning, can infer affordability in sentences, and can learn geometry-aware 3D concepts that allow pose-aware object recognition to happen with weak/sparse labels. 
Our experiments suggest that the proposed architectures are essential for the models to generalize across objects and locations, and it overcomes many limitations of 2D CNNs. I will show how we can use the proposed 3D representations to build machine perception and physical understanding more close to humans.

Current state-of-the-art CNNs can localize and name objects in internet photos, yet they miss the basic knowledge that a two-year-old toddler already possesses: objects persist over time despite changes in the observer’s viewpoint or during cross-object occlusions; objects have 3D extent; solid objects do not pass through each other. In this talk, I will introduce neural architectures that learn to parse video streams of a static scene into world-centric 3D feature maps by disentangling camera motion from scene appearance. I will show that the proposed architectures learn object permanence, can imagine RGB views from novel viewpoints in truly novel scenes, can conduct basic spatial reasoning and planning, can infer affordability in sentences, and can learn geometry-aware 3D concepts that allow pose-aware object recognition to happen with weak/sparse labels. Our experiments suggest that the proposed architectures are essential for the models to generalize across objects and locations, and that they overcome many limitations of 2D CNNs. I will show how we can use the proposed 3D representations to build machine perception and physical understanding closer to that of humans.

While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both a semantic and a spatial signal. Finally, I will also briefly talk about our latest work on multisensory learning for robotics.

GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. Still, challenges remain in using a GPU to target more general-purpose applications. GPUs achieve impressive speedups when compared to CPUs, since GPUs have a large number of compute cores and high memory bandwidth. Recent GPU performance is approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends with GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous System Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.
